DBP2:Queens:PerkStation

PERK Station (Image overlay to perform/train image-guided needle interventions)

Objective:

The objective of this project (PERK Station) is to develop an end-to-end solution, implemented as a Slicer 3 module, to assist in performing and training for image-guided percutaneous needle interventions. The software, together with its hardware, overlays the acquired image (CT/MR) on the patient/phantom. The physician/trainee looks at the patient/phantom through a mirror showing the image overlay, and the CT/MR image appears to float inside the body at the correct size and position, as if the physician/trainee had 2D 'X-ray vision'.

Description:

The PERK Station comprises image overlay, laser overlay, and standard tracked freehand navigation in a single suite. The end-to-end software module, together with its hardware, operates in two modes: a) Clinical and b) Training.

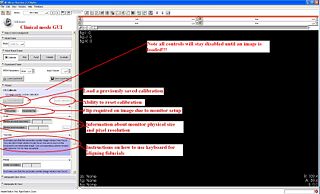

- Clinical mode: In clinical mode, the system enables the physician to perform image-guided percutaneous needle biopsies. The workflow in clinical mode consists of four steps:

- Calibration:

- The objective of this step is to register the image overlay device with the patient/phantom lying on the scanner table. In this stage, the software sends the image to the secondary monitor in its correct physical dimensions. The secondary monitor is mounted with a semi-transparent mirror at a 45-degree angle. The image displayed on the monitor is therefore reflected in the mirror and, when viewed through the mirror, appears to float on the patient/phantom. Depending on how the secondary monitor is mounted with respect to the mirror, a horizontal or vertical flip may be required. Once the correct flip arrangement is chosen, the image shown in SLICER's slice viewer should correspond to what is seen through the mirror. The software then lets the user (who may be the physician) translate and rotate the image as seen through the mirror so that it aligns with the fiducials mounted or strapped on the patient/phantom. This fiducial alignment achieves in-plane registration. For out-of-plane (z) registration, the image projection plane should coincide with the laser-guide plane, which is also the plane of acquisition. Note that registration of the image takes place only on the secondary monitor: even though the image there is scaled, moved, rotated, and flipped, the image displayed in SLICER's slice viewer stays undisturbed. This allows the physician/user to zoom in or out of the image in the slice viewer for planning without affecting the calibration. The calibration can optionally be saved to an XML file.

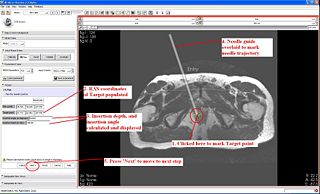
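The calibration described above combines a scale to physical size, an optional flip, a rotation, and a translation. The following is a minimal sketch of how such a transform could be composed, not the module's actual implementation. The function names, the `monitor_pixel_pitch_mm` parameter, and the choice to rotate about the image origin are assumptions made for illustration.

```python
import math

def overlay_scale(image_spacing_mm, monitor_pixel_pitch_mm):
    """Monitor pixels per image pixel so the image shows at true physical size.

    Both parameters are hypothetical inputs: the image pixel spacing (mm)
    and the physical size of one monitor pixel (mm).
    """
    return image_spacing_mm / monitor_pixel_pitch_mm

def calibration_matrix(scale, flip_h, flip_v, rotation_deg, tx, ty):
    """3x3 homogeneous matrix: scale/flip first, then rotate, then translate."""
    sx = -scale if flip_h else scale   # horizontal flip negates x
    sy = -scale if flip_v else scale   # vertical flip negates y
    c = math.cos(math.radians(rotation_deg))
    s = math.sin(math.radians(rotation_deg))
    return [
        [c * sx, -s * sy, tx],
        [s * sx,  c * sy, ty],
        [0.0,     0.0,    1.0],
    ]

def apply_transform(m, x, y):
    """Map an image pixel coordinate to a monitor pixel coordinate."""
    return (m[0][0] * x + m[0][1] * y + m[0][2],
            m[1][0] * x + m[1][1] * y + m[1][2])

# Example: 0.5 mm image pixels on a monitor with 0.25 mm pixels,
# horizontally flipped, no rotation, shifted by (10, 5) monitor pixels.
scale = overlay_scale(0.5, 0.25)
matrix = calibration_matrix(scale, True, False, 0.0, 10.0, 5.0)
print(apply_transform(matrix, 1.0, 1.0))
```

Because this transform would apply only to the secondary monitor, the slice viewer can keep its own independent zoom and pan, which matches the behavior described for the calibration step.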

- Planning:

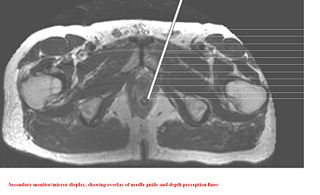

- Once the system is calibrated and registered with the patient, the software moves to the next step. In this step, the entry and target points are specified by mouse clicks. The software calculates the insertion angle with respect to the vertical and the insertion depth. The software also overlays the needle guide on the secondary monitor/mirror to assist the physician/user in performing the intervention. There is an option to reset the plan in case the physician wishes to perform another needle intervention with the same image.
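The insertion angle and depth follow directly from the entry and target points. The sketch below assumes both points are given in millimetres in the image plane, with the second coordinate pointing vertically down into the patient. The function name and this coordinate convention are assumptions for illustration, not taken from the module.

```python
import math

def plan_insertion(entry_mm, target_mm):
    """Return (angle_deg, depth_mm) for a needle path in the image plane.

    angle_deg is measured from the vertical axis (assumed to be +y);
    depth_mm is the straight-line distance from entry to target.
    """
    dx = target_mm[0] - entry_mm[0]
    dy = target_mm[1] - entry_mm[1]
    depth_mm = math.hypot(dx, dy)
    # atan2(dx, dy) gives the signed angle away from the +y (vertical) axis.
    angle_deg = math.degrees(math.atan2(dx, dy))
    return angle_deg, depth_mm

angle, depth = plan_insertion((0.0, 0.0), (30.0, 40.0))
print(f"angle from vertical: {angle:.2f} deg, depth: {depth:.1f} mm")
```

For an entry point at the origin and a target 30 mm across and 40 mm down, the depth is 50 mm and the angle from vertical is about 36.87 degrees.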

- Insertion:

- After planning, in the insertion step, additional depth perception lines appear at 10 mm intervals to help the physician insert the needle to the correct depth.

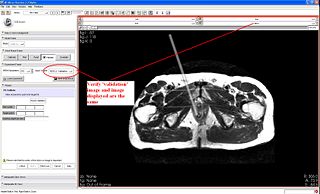
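The 10 mm depth gradations can be thought of as points spaced evenly along the planned needle path. The sketch below shows one way to compute their positions. The function name, the 2D millimetre coordinates, and the small rounding tolerance are assumptions for illustration, and the module may draw its depth lines differently.

```python
import math

def depth_marks(entry_mm, target_mm, step_mm=10.0):
    """Return a list of (depth_mm, (x, y)) marks along the planned path.

    Marks start one step below the entry point and stop at or before
    the target.
    """
    dx = target_mm[0] - entry_mm[0]
    dy = target_mm[1] - entry_mm[1]
    depth = math.hypot(dx, dy)
    if depth == 0.0:
        return []
    ux, uy = dx / depth, dy / depth          # unit vector along the needle path
    count = int(depth / step_mm + 1e-9)      # tolerance guards against rounding
    marks = []
    for i in range(1, count + 1):
        d = i * step_mm
        marks.append((d, (entry_mm[0] + ux * d, entry_mm[1] + uy * d)))
    return marks

for d, (x, y) in depth_marks((0.0, 0.0), (30.0, 40.0)):
    print(f"{d:.0f} mm -> ({x:.1f}, {y:.1f})")
```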

- Validation:

- After the needle insertion is complete, the physician/user acquires a validation image/volume with the needle inside the patient/phantom. The validation volume/image is added to the scene. The physician/user can then mark the actual needle entry and end points to obtain error calculations.
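The page does not list which error measures the module reports. As a hedged illustration, the sketch below computes four plausible ones from the planned and actual points: entry point error, target error, angle error, and depth error. All names and the 2D millimetre coordinate convention (second coordinate vertical) are assumptions.

```python
import math

def _distance(p, q):
    return math.hypot(q[0] - p[0], q[1] - p[1])

def _angle_from_vertical(entry, tip):
    return math.degrees(math.atan2(tip[0] - entry[0], tip[1] - entry[1]))

def validation_errors(planned_entry, planned_target, actual_entry, actual_tip):
    """Compare the planned needle path with the one marked on the validation image."""
    return {
        "entry_error_mm": _distance(planned_entry, actual_entry),
        "target_error_mm": _distance(planned_target, actual_tip),
        "angle_error_deg": (_angle_from_vertical(actual_entry, actual_tip)
                            - _angle_from_vertical(planned_entry, planned_target)),
        "depth_error_mm": (_distance(actual_entry, actual_tip)
                           - _distance(planned_entry, planned_target)),
    }

errors = validation_errors((0.0, 0.0), (0.0, 50.0), (3.0, 4.0), (3.0, 54.0))
for name, value in errors.items():
    print(f"{name}: {value:.2f}")
```

In this example the actual needle is parallel to the plan but shifted, so the entry and target errors are both 5 mm while the angle and depth errors are zero.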

- Training mode: In training mode, the system provides feedback to trainees performing image-guided percutaneous needle interventions in a controlled environment. The workflow adds an 'Evaluation' step to the four steps described earlier. In the following description, only the differences are highlighted:

- Calibration:

- In this step, a different wizard GUI is loaded. The software does not automatically display the image in the correct dimensions; instead, it relies on the student/user's input. The calculation of the translation and rotation required to align the system is also left to the user.

- Planning:

- In this step too, the insertion depth and insertion angle are left for the user to calculate and enter.

- Insertion:

- This step is essentially the same as in clinical mode.

- Validation:

- This step is essentially the same as in clinical mode.

- Evaluation:

- In this step, the errors made in the calculations are displayed to the student/user so that his/her performance in the intervention can be assessed objectively.
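An evaluation of this kind amounts to comparing the values the trainee entered with reference values for the same quantities. The sketch below is only an illustration of that idea. The quantity names, the reference values, and the tolerances are hypothetical and are not taken from the module.

```python
def evaluate_trainee(entered, reference, tolerances):
    """Report the signed error of each entered value against its reference."""
    report = {}
    for name, ref_value in reference.items():
        error = entered[name] - ref_value
        report[name] = {
            "error": error,
            "within_tolerance": abs(error) <= tolerances[name],
        }
    return report

# Hypothetical example: the trainee's planning inputs versus reference values.
report = evaluate_trainee(
    entered={"angle_deg": 35.0, "depth_mm": 52.0},
    reference={"angle_deg": 36.87, "depth_mm": 50.0},
    tolerances={"angle_deg": 2.0, "depth_mm": 1.0},
)
for name, result in report.items():
    print(name, round(result["error"], 2), result["within_tolerance"])
```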

Progress:

The software is almost functionally complete. It is a dynamically loadable module, which means that none of the SLICER code needs to be modified to integrate it. For compliance with SLICER's interactive module architecture, the software code still needs to be reviewed by a member of the engineering core.

Current deployment/usage:

- Clinical mode: The software has been delivered to the team at Johns Hopkins University, Baltimore. It is currently being used in phantom and cadaver trials.

- Training mode: The integrated software and hardware system (hardware designed and developed by Paweena U-Thainual and Iulian Iordachita) debuted as part of a Fall undergraduate course taught by Dr. Gabor Fichtinger in the School of Computing at Queen's University.

Software source code:

Software installation instructions:

- Installing Slicer: go to the Slicer3 Install site.

- Build the module from source code, then copy the generated PerkStationModule.dll file to the directory SlicerInstallationDir/lib/Slicer3/Modules.

Tutorial (end-to-end):

- Perk Station 'Clinical' mode: PERK_Station_ClinicalMode_Workflow

Publications

Team

- PI: Gabor Fichtinger, Queen’s University (gabor at cs.queensu.ca)

- Hardware: Paweena U-Thainual, Queen's University (paweena@cs.queensu.ca), Iulian Iordachita, Johns Hopkins University (iordachita@jhu.edu)

- Software Engineer: Siddharth Vikal, Queen’s University (vikal at cs.queensu.ca)

- JHU Software Engineer Support: Csaba Csoma, Johns Hopkins University, csoma at jhu.edu

- NA-MIC Engineering Contact: Katie Hayes, MSc, Brigham and Women's Hospital, hayes at bwh.harvard.edu

- Host Institutes: Queen's University & Johns Hopkins University

Links

Questions? Feedback?

Contact