Latest revision as of 03:53, 6 April 2009

Building SCIRun on an SPL Machine

These are the current build instructions for the Fedora Core 5 and Fedora Core 7 SPL machines.

To obtain the working branch used in the collaboration, you need Subversion (svn). Check it out with:

svn co https://code.sci.utah.edu/svn/SCIRun/cibc/trunk/SCIRun

If you want a standard release build instead, check out one of the branches listed at https://code.sci.utah.edu/svn/SCIRun/, such as:

svn co https://code.sci.utah.edu/svn/SCIRun/cibc/branches/3.0.x/SCIRun

For more general information on building SCIRun, see the SCIRun Installation Wiki (http://software.sci.utah.edu/SCIRunDocs/index.php/CIBC:Documentation:SCIRun:Installation).

To build, cd into the SCIRun checkout (trunk/SCIRun in these instructions) and run the build script:

./build.sh -jx

where x is the number of cores on your machine (for example, ./build.sh -j4 on a four-core machine). SCIRun currently requires cmake 2.4.x. If your installed cmake is not 2.4.x, add the --get-cmake option (./build.sh -jx --get-cmake) so that build.sh creates a local copy.
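The choice of build.sh arguments described above can be sketched as a small sh helper. This is a hypothetical sketch, not part of SCIRun: the names ncores, cmake_is_24 and build_args are made up for illustration, the core count comes from getconf _NPROCESSORS_ONLN, and it assumes cmake --version prints a line such as "cmake version 2.4-patch 8". It is written for sh/bash rather than the csh/tcsh shell shown in the prompts on this page.

```shell
#!/bin/sh
# Hypothetical helpers (not part of SCIRun) for choosing build.sh arguments.

# Number of online CPU cores, falling back to 1 if getconf is unavailable.
ncores() {
  getconf _NPROCESSORS_ONLN 2>/dev/null || echo 1
}

# Succeed if the given `cmake --version` line reports a 2.4.x release.
# Assumption: CMake 2.4 prints something like "cmake version 2.4-patch 8".
cmake_is_24() {
  case "$1" in
    *"version 2.4-"* | *"version 2.4."* | *"version 2.4") return 0 ;;
    *) return 1 ;;
  esac
}

# Print the arguments for ./build.sh: -j<cores>, plus --get-cmake when the
# installed cmake is not 2.4.x (or is missing, i.e. an empty version line).
# $1: first line of `cmake --version` output, $2: core count
build_args() {
  args="-j$2"
  if ! cmake_is_24 "$1"; then
    args="$args --get-cmake"
  fi
  printf '%s\n' "$args"
}
```

From trunk/SCIRun, after sourcing these helpers in sh or bash, one possible invocation is ./build.sh $(build_args "$(cmake --version 2>/dev/null | head -n 1)" "$(ncores)"). The outer $(...) is deliberately unquoted so that -jN and --get-cmake reach build.sh as separate arguments.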

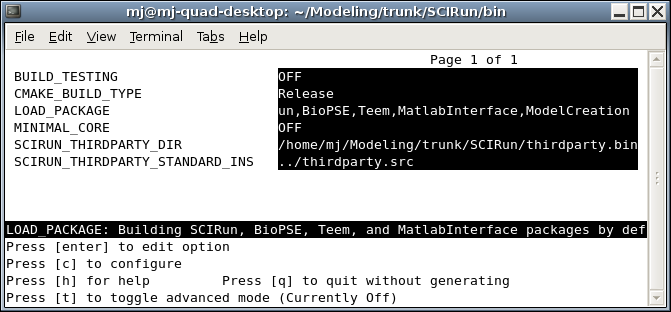

Our current project uses the extra package ModelCreation. If you do not need this package, skip this step. To enable ModelCreation, cd to the trunk/SCIRun/bin directory.

If the system cmake/ccmake on the SPL machine is version 2.4.x, type:

ccmake ../src

If you are instead using the local ccmake that build.sh created with the --get-cmake option, type the following from the trunk/SCIRun/bin directory:

../cmake/local/bin/ccmake ../src

Once in ccmake, add ModelCreation to the LOAD_PACKAGE list: arrow down to LOAD_PACKAGE and press Return to edit the listed packages. When you are done, press c to configure and then g to generate.
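If you prefer not to use the interactive ccmake screen, CMake's -D option can set a cache entry from the command line before you run make. The sketch below is hypothetical (current_packages and add_model_creation are illustrative names, not SCIRun tools): it reads LOAD_PACKAGE from CMakeCache.txt and appends ModelCreation. It assumes the packages are separated by commas; check the separator ccmake shows on your machine and pass it as the second argument if it differs.

```shell
#!/bin/sh
# Hypothetical helpers (not part of SCIRun) for adding ModelCreation to
# LOAD_PACKAGE without the interactive ccmake screen.

# Print the current LOAD_PACKAGE value from a CMakeCache.txt file ($1).
# CMake cache entries have the form NAME:TYPE=VALUE.
current_packages() {
  sed -n 's/^LOAD_PACKAGE:[A-Z]*=//p' "$1"
}

# Append ModelCreation to the package list in $1 unless it is already there.
# Assumption: packages are separated by $2 (default ","), which should be
# checked against what ccmake shows before relying on this.
add_model_creation() {
  sep=${2:-,}
  if [ -z "$1" ]; then
    printf '%s\n' ModelCreation
    return 0
  fi
  case "$sep$1$sep" in
    *"${sep}ModelCreation${sep}"*) printf '%s\n' "$1" ;;
    *) printf '%s\n' "$1${sep}ModelCreation" ;;
  esac
}
```

From trunk/SCIRun/bin, something like cmake -DLOAD_PACKAGE="$(add_model_creation "$(current_packages CMakeCache.txt)")" ../src would then configure and generate in one step. If you built with --get-cmake, use the cmake that sits next to the local ccmake (assumed to be ../cmake/local/bin/cmake).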

Generating exits ccmake and returns you to the shell in trunk/SCIRun/bin. Then run make:

make -jx

When make finishes, you currently have to point SCIRun at the correct OpenGL drivers for the Fedora build on the SPL machines, as described in this discussion. This uses a script written in ksh, so ksh must be installed; all SPL machines should have it. On another OS or machine, SCIRun may already be set up with the correct OpenGL drivers, in which case you can skip this step. On the SPL Fedora machines, cd to the trunk/SCIRun/bin directory and type:

unsetenv LD_LIBRARY_PATH

find . -name build.make | xargs ~dav/bin/updatefile.orig ~dav/changeit
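Before running the find command above, it may be worth confirming that its prerequisites are in place. This hypothetical sh sketch (driver_fix_ready and count_build_make are illustrative names, not SPL tools) checks that ksh is installed, that the update script is executable and that the change file is readable, and counts the build.make files the command would process. Treating changeit as a file that only needs to be readable is an assumption.

```shell
#!/bin/sh
# Hypothetical pre-flight check (not part of the SPL scripts) for the
# driver-fix step. On the SPL machines the two paths would be
# ~dav/bin/updatefile.orig and ~dav/changeit.

# Succeed only if ksh is installed, the update script ($1) is executable,
# and the change file ($2) is readable; otherwise report what is missing.
driver_fix_ready() {
  if ! command -v ksh >/dev/null 2>&1; then
    echo "ksh is not installed" >&2
    return 1
  fi
  if [ ! -x "$1" ]; then
    echo "not executable: $1" >&2
    return 1
  fi
  if [ ! -r "$2" ]; then
    echo "not readable: $2" >&2
    return 1
  fi
  return 0
}

# Count the build.make files under directory $1 (default: current directory),
# i.e. the files the find | xargs command would hand to the update script.
count_build_make() {
  find "${1:-.}" -name build.make | wc -l | tr -d ' '
}
```

In sh or bash, the csh command unsetenv LD_LIBRARY_PATH becomes unset LD_LIBRARY_PATH. A possible check, run from trunk/SCIRun/bin, is driver_fix_ready ~dav/bin/updatefile.orig ~dav/changeit && count_build_make.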

When this is done, run make again in the trunk/SCIRun/bin directory:

make -jx

You should now have a working version of SCIRun in your trunk/SCIRun/bin directory.

The driver problem can recur, so check for it before you run SCIRun. Following the discussion linked above, run ldd on the scirun binary and look at which libGL it loads.

In this example, SCIRun is pointed at the correct, hardware-accelerated NVIDIA drivers:

spl_tm64_1:/workspace/mjolley/Modeling/trunk/SCIRun/bin% ldd scirun | grep GL
libGL.so.1 => /usr/lib64/nvidia/libGL.so.1 (0x0000003f8e200000)
libGLU.so.1 => /usr/lib64/libGLU.so.1 (0x000000360c200000)
libGLcore.so.1 => /usr/lib64/nvidia/libGLcore.so.1 (0x0000003f80e00000)

In contrast, here SCIRun is pointed at the wrong drivers, which lack hardware acceleration:

spl_tm64_1:/workspace/mjolley/Modeling/trunk/SCIRun/bin% ldd scirun | grep GL
libGL.so.1 => /usr/lib64/libGL.so.1 (0x0000003f84800000)
libGLU.so.1 => /usr/lib64/libGLU.so.1 (0x000000360c200000)

If the script above has already been run, you can usually get back to the correct drivers by typing:

unsetenv LD_LIBRARY_PATH

and then rerunning the ldd check to confirm that the correct drivers are in use.
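The ldd check can be scripted as well. This hypothetical sh helper (uses_nvidia_gl is an illustrative name, not a SCIRun tool) succeeds when the ldd output shows libGL.so.1 coming from a directory containing /nvidia/, matching the correct-driver example above. That path pattern is an assumption based on /usr/lib64/nvidia/ on the SPL machines and may differ elsewhere.

```shell
#!/bin/sh
# Hypothetical helper (not part of SCIRun) for the ldd driver check.

# Succeed if the ldd output in $1 shows libGL.so.1 resolving into a
# directory containing "/nvidia/" (assumed hardware driver location).
# The leading (^|space) anchor keeps e.g. libEGL.so.1 from matching.
uses_nvidia_gl() {
  printf '%s\n' "$1" | grep -Eq '(^|[[:space:]])libGL\.so\.1 => [^ ]*/nvidia/'
}
```

For example, from trunk/SCIRun/bin: if uses_nvidia_gl "$(ldd ./scirun)"; then echo "hardware GL"; else echo "software GL: unset LD_LIBRARY_PATH and check again"; fi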

For more information, see the CIBC (http://www.sci.utah.edu/cibc/) and the Scientific Computing and Imaging Institute at the University of Utah (http://www.sci.utah.edu/).