
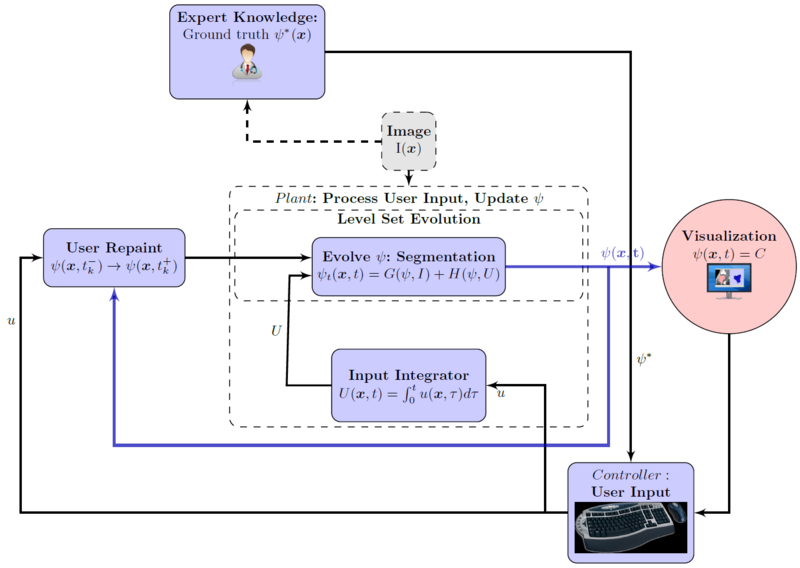


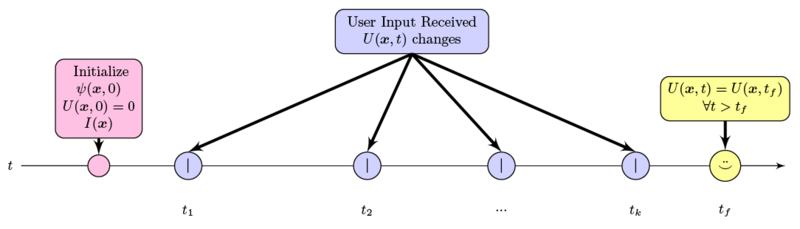


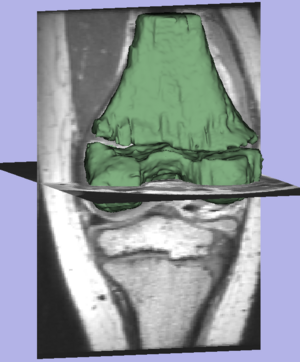


Revision as of 17:10, 17 October 2011


Interactive Image Segmentation With Active Contours

Proton therapy is used to deliver accurate doses of radiation to people undergoing cancer treatment. At the beginning of treatment, a personalized plan is created for the patient, describing the amount of radiation to be delivered and the locations to which it must be delivered. However, over the course of the treatment, which lasts weeks, the patient's anatomy is likely to change. Adaptive radiotherapy aims to improve fractionated radiotherapy by re-optimizing the radiation treatment plan for each session. To update the plan, the CT images acquired during a treatment session are registered to the treatment plan, and the radiation doses to be delivered are recalculated accordingly. Registering a patient scan to a model (e.g., an atlas) provides important prior information for a segmentation algorithm; conversely, a segmentation of a structure can be used to improve registration. Hence, segmentation and registration must be done concurrently.
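In its simplest form, registering a session scan to a planning scan recovers a translation between them. The sketch below uses phase correlation, a standard FFT-based technique chosen here purely for illustration; it is not necessarily the registration method used in this project. It assumes the two volumes differ only by an integer, circular shift, and the function name `estimate_translation` is hypothetical.

```python
import numpy as np

def estimate_translation(fixed, moving, eps=1e-12):
    """Estimate the integer shift s such that moving ~= np.roll(fixed, s).

    Works for 2-D slices or 3-D volumes. Assumes a pure circular translation
    (illustrative assumption; real CT registration also needs rotation,
    deformation, and handling of field-of-view boundaries).
    """
    F = np.fft.fftn(fixed)
    M = np.fft.fftn(moving)
    cross = M * np.conj(F)
    cross /= np.abs(cross) + eps          # keep only the phase difference
    corr = np.fft.ifftn(cross).real       # ideally a delta at the shift
    peak = np.unravel_index(np.argmax(corr), corr.shape)
    # Indices past the half-way point correspond to negative shifts.
    return tuple(int(p - n) if p > n // 2 else int(p)
                 for p, n in zip(peak, corr.shape))
```

Once the shift is known, atlas labels could be carried into the session scan (e.g., with `np.roll(atlas_labels, shift, axis=...)`) to serve as a prior for segmentation, which is the coupling between registration and segmentation described above.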

Description

Initial experiments show that bony structures such as the mandible can be segmented accurately with a variational active contour. However, for soft tissue such as the brain stem, the intensity profile does not contain sufficient information for reasonably accurate segmentation. To deal with such "soft boundaries", infinite-dimensional active contours must be constrained by shape priors and/or interactive user input.

One way to constrain a segmentation is shown in our work in MTNS, where known spatial relationships between structures are exploited. First, a structure we are confident in is segmented. Probabilistic PCA is then used to compute a metric describing how likely the obtained segmentation of this structure is; this metric essentially expresses our confidence that the segmentation is correct. Next, the location of this structure is used as prior information (it becomes a landmark) to segment a more difficult structure. Iterating this process, the nth structure to be segmented has n-1 priors to draw information from, with a confidence metric for each prior. The likely location of the nth structure, calculated as described above, serves as an input that constrains an active contour algorithm.

Additionally, we have had excellent results when constraining structures of the eye to simple geometrical shapes, such as ellipses and tubes, to limit the number of free parameters. A sample segmentation of the eyeball is shown below.
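The confidence metric and the sequential use of landmarks described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the MTNS implementation: PPCA is fit in closed form (the Tipping and Bishop maximum-likelihood solution) to training shape vectors, the log-likelihood of a new shape serves as the "how likely" metric, and the mapping to a (0, 1] confidence (`shape_confidence`) and the confidence-weighted vote (`predict_location`, using hypothetical learned mean offsets between structures) are hypothetical choices made for this example.

```python
import numpy as np

def fit_ppca(X, q):
    """Closed-form maximum-likelihood PPCA on rows of X.

    X: (n_samples, d) training shape vectors; q: latent dimension, q < d.
    Returns the mean and model covariance C = W W^T + sigma^2 I.
    """
    mu = X.mean(axis=0)
    S = np.cov(X, rowvar=False, bias=True)       # ML sample covariance
    evals, evecs = np.linalg.eigh(S)             # ascending eigenvalues
    evals, evecs = evals[::-1], evecs[:, ::-1]   # sort descending
    sigma2 = max(evals[q:].mean(), 1e-9)         # noise = mean of discarded eigenvalues
    W = evecs[:, :q] * np.sqrt(np.maximum(evals[:q] - sigma2, 0.0))
    C = W @ W.T + sigma2 * np.eye(X.shape[1])
    return mu, C

def _mahalanobis_sq(x, mu, C):
    diff = np.asarray(x, dtype=float) - mu
    return float(diff @ np.linalg.solve(C, diff))

def shape_log_likelihood(x, mu, C):
    """Gaussian log-density of shape vector x under the fitted PPCA model."""
    d = len(mu)
    _, logdet = np.linalg.slogdet(C)
    return -0.5 * (d * np.log(2 * np.pi) + logdet + _mahalanobis_sq(x, mu, C))

def shape_confidence(x, mu, C):
    """Hypothetical mapping of the PPCA fit to (0, 1]; equals 1 at the mean shape."""
    return float(np.exp(-0.5 * _mahalanobis_sq(x, mu, C) / len(mu)))

def predict_location(prior_centroids, mean_offsets, confidences):
    """Hypothetical confidence-weighted estimate of the nth structure's location.

    Each of the n-1 already segmented structures votes with its centroid plus
    a mean offset (assumed learned from training data) to the nth structure.
    """
    w = np.asarray(confidences, dtype=float)
    votes = np.asarray(prior_centroids, float) + np.asarray(mean_offsets, float)
    return (w[:, None] * votes).sum(axis=0) / w.sum()
```

A weighted average is the simplest way to let a poorly segmented landmark (low confidence) contribute less to the location estimate; the estimate would then constrain the active contour, for example as an initialization or a distance penalty.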

Flowchart for the interactive segmentation approach. Notice the user's pivotal role in the process.

Time-line of user input into the system. Note that user input is sparse, has local effect only, and decreases in frequency and magnitude over time.

Result of the segmentation.

Current State of Work

We have gathered a sample data set; currently, we are registering the scan to create a unified patient model. This model will localize structures and constrain their shape. Additionally, we are investigating interactive approaches to registration and segmentation that "put the user in the loop" while greatly reducing the work compared to manual approaches.
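One way to picture sparse user input with a local effect only is a click that locally reshapes a level-set representation of the contour. The sketch below is a hypothetical illustration, not the control framework described in the publications: it assumes the convention that the level-set function `phi` is negative inside the segmentation, and the function name `apply_user_edit` and its parameters `sigma` and `margin` are invented for this example.

```python
import numpy as np

def apply_user_edit(phi, click, label, sigma=3.0, margin=0.5):
    """Hypothetical local correction of a level-set function phi (phi < 0 inside).

    click: voxel index tuple; label: +1 = "should be inside", -1 = "should be outside".
    The edit is a Gaussian bump truncated at 3*sigma, so voxels far from the
    click are left untouched, and it is scaled so the clicked voxel ends up on
    the side of the contour the user asked for.
    """
    click = tuple(click)
    grids = np.indices(phi.shape)
    d2 = sum((g - c) ** 2 for g, c in zip(grids, click))
    bump = np.exp(-d2 / (2.0 * sigma ** 2))
    bump[d2 > (3.0 * sigma) ** 2] = 0.0       # compact support: local effect only
    strength = abs(phi[click]) + margin       # enough to flip the clicked voxel
    return phi - label * strength * bump      # returns a new array; phi is unchanged
```

In an interactive session, such edits would be interleaved with the automatic contour evolution, so that as the segmentation improves, the user needs to click less often and each click changes less.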

Key Investigators

- Georgia Tech: Ivan Kolesov, Peter Karasev, Karol Chudy, and Allen Tannenbaum

- Emory University: Grant Muller and John Xerogeanes

Publications

In Press

I. Kolesov, P. Karasev, G. Muller, K. Chudy, J. Xerogeanes, and A. Tannenbaum. Human Supervisory Control Framework for Interactive Medical Image Segmentation. MICCAI Workshop on Computational Biomechanics for Medicine, 2011.

P. Karasev, I. Kolesov, K. Chudy, G. Muller, J. Xerogeanes, and A. Tannenbaum. Interactive MRI Segmentation with Controlled Active Vision. IEEE CDC-ECC, 2011.