Difference between revisions of "GWE MiniRetreat"

From NAMIC Wiki


Revision as of 13:20, 23 February 2009

Marco Ruiz visited BWH Thorn and Boylston for a few days to address a wide range of GWE-related tasks.


Summary, Accomplishments and Action Items

Work with Steve Pieper

Experiment Results Browser

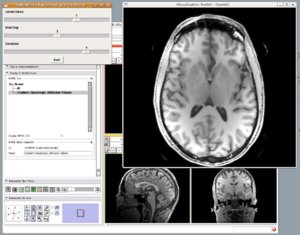

Screenshot of the prototype implemented in slicer3. The prototype script reads the automatically generated GWE parameter file to determine each valid combination of variables. A preprocessing routine creates images of each volume dataset. Using the sliders, the user can scroll through the preview images in real time. To explore a particular volume in more detail, the user can click the load button to bring the entire volume in to slicer.

- Brainstormed a way to capture all permutations of a particular experiment so that a researcher can post-process the results. Outcome: a simple CSV file.

- Implemented the "CSV dump" feature and integrated it into the web client (other clients pending). It will be released to the public in the next version.

- Set up GWE (new interim version) on Steve's laptop and on "john.bwh.harvard.edu" (a 16-core machine).

- Ran a gradient anisotropic diffusion parameter exploration experiment consisting of 300 runs on "john" and generated the corresponding CSV file.

${conductance}=$range(0.5,1.5,0.1)

${timeStep}=$range(0.05,0.1,0.01)

${iterations}=$range(1,5,1)

${fileOut}=/workspace/pieper/gwe/unu-test/${SYSTEM.JOB_NUM}.nrrd

${gradient}=$slicer(${SLICER_HOME},GradientAnisotropicDiffusion)

${gradient_CMD} --conductance ${conductance} --timeStep ${timeStep} --iterations ${iterations}

${SLICER_HOME}/share/MRML/Testing/TestData/fixed.nrrd ${fileOut}
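The idea behind the CSV dump can be sketched in Python: expand every combination of the experiment variables and write one row per job. This is only an illustration, not GWE's implementation. The value lists are written out explicitly and are deliberately smaller than the ranges above, because how `$range` treats its end point is not stated here; the `job_num` column name and numbering from 1 are also assumptions.

```python
import csv
import io
import itertools


def expand(variables):
    """Return one dict per combination of variable values.

    Job numbers start at 1 here; that numbering is an assumption,
    not something taken from GWE.
    """
    names = list(variables)
    value_lists = [variables[name] for name in names]
    rows = []
    for job_num, combo in enumerate(itertools.product(*value_lists), start=1):
        row = {"job_num": job_num}
        row.update(zip(names, combo))
        rows.append(row)
    return rows


def to_csv(rows, fieldnames):
    """Serialize the rows as CSV text with a header line."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


# Illustrative values only (hypothetical, much smaller than the real sweep).
variables = {
    "conductance": [0.5, 1.0, 1.5],
    "timeStep": [0.05, 0.1],
    "iterations": [1, 5],
}
rows = expand(variables)
csv_text = to_csv(rows, ["job_num", *variables])
```

A results browser can then read this file to map any slider position (one value per variable) back to the job that produced the matching output.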

- Using the CSV file, Steve generated PNG slice images for the resulting volumes.

- Steve prototyped a user-friendly browser that lets the user flip through a set of images and volumes by selecting parameter combinations with sliders.

- Brainstormed and designed an "experiment results publishing server" as the generalized extension of Steve's prototype to inspect the outputs of a parameter exploration experiment.

- Independent web server application where experiment results (order results) are hosted for the public to browse with an interactive experience similar to Steve's prototype.

- GWE clients (terminal, command line, web, gslicer) will support an operation to "upload order results" to a selected "publishing server".

- This application will allow users to flip in real time through the associated data of the jobs that belong to a selected experiment using sliders (all process properties and experiment variables including images generated, execution times, parameters used, standard output files).

- Heavy use of JavaScript real-time features to improve the user experience.

- Target release: next GWE version, mid-March 2009.

- Using the "unu" command sequence provided by Steve, implemented a P2EL macro that generates sliced PNG images for volumes (given the plane and elevation), so that users can automatically generate sampling slices as part of their parameter exploration experiments using GWE:

$pngGeneratorCommand($${HOME},$${FILE},$${SLICE_A},$${SLICE_P}) {

$${FILE_EXT}=$fileExt($${FILE})

$${FILE_TMP}=$${FILE}.tmp.$${FILE_EXT}

$${UNU_BASE}=$const(${SLICER_HOME}/Slicer3 --launch unu)

$${UNU_A}=$const($${UNU_BASE} slice -a $${SLICE_A} -p $${SLICE_P} -i $${FILE} -o $${FILE_TMP})

$${UNU_B}=$const($${UNU_BASE} quantize -b 8 -i $${FILE_TMP} -o $${FILE}.png)

$${_}=$const($${UNU_A} && $${UNU_B} && rm $${FILE_TMP})

}
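For readers unfamiliar with P2EL, the shell command that the macro above assembles can be sketched in Python. This mirrors only what the macro text shows (a `unu slice`, then a `unu quantize -b 8`, then removal of the temporary file). The function name, the example paths, and the assumption that `$fileExt` returns the extension without its leading dot are hypothetical.

```python
import shlex
from pathlib import PurePosixPath


def png_generator_command(slicer_home, volume, axis, position):
    """Build the 'slice && quantize && rm' command line as a single string."""
    # Assumption: $fileExt yields the extension without the dot, e.g. "nrrd".
    ext = PurePosixPath(volume).suffix.lstrip(".")
    tmp = f"{volume}.tmp.{ext}"
    unu = f"{shlex.quote(slicer_home + '/Slicer3')} --launch unu"
    # Extract one 2D slice along the given axis at the given position.
    slice_cmd = (
        f"{unu} slice -a {axis} -p {position} "
        f"-i {shlex.quote(volume)} -o {shlex.quote(tmp)}"
    )
    # Quantize the slice to 8 bits and write it as <volume>.png.
    quantize_cmd = (
        f"{unu} quantize -b 8 -i {shlex.quote(tmp)} "
        f"-o {shlex.quote(volume + '.png')}"
    )
    # Remove the temporary slice only if both steps succeeded.
    return f"{slice_cmd} && {quantize_cmd} && rm {shlex.quote(tmp)}"


# Hypothetical paths, for illustration only.
command = png_generator_command("/opt/slicer", "/data/12.nrrd", 2, 30)
```

Chaining with `&&` means the temporary file is left in place when either step fails, which can help when debugging a failed job.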

GWE Future and Cloud Computing

- Reviewed the future architecture of GWE to support cloud computing and dispersed compute nodes (nodes that belong to neither a cloud nor a cluster). Two options:

- Hierarchical. Complex design, practically scalable, moderate effort to implement. Subsystems required: grid controller (new, complicated) -> relays (new, extremely straightforward) -> daemons (done) -> agents (done).

- Peer-to-peer (daisy chain). Simple design and totally scalable, but difficult to implement (open to countless scenarios where data synchronization errors can occur).

- Hierarchical option chosen for practical reasons.

- Brainstormed the cloud computing direction for GWE:

- New resource manager driver for EC2, configured with EC2 account information, number of nodes and S3 image 'pointer' to load onto the nodes.

- New file driver to support S3 file system and new virtual file system to map to S3 file system for caching and staging files.

- A system to manage a:

- homogeneous environment (cloud) can support 90% of the use cases with low usability complexity and medium development effort.

- heterogeneous environment (grid) can support 100% of the use cases, but increases usability complexity considerably and involves a large development effort. Far more than 10% of users could easily decide to abandon the solution because of its impractical complexity.

- Best to adapt grid (heterogeneous environment) as much as possible to the cloud (homogeneous environment) and not the other way around.

GSlicer3 Future

- No more GWE CLM proxy modules.

- New application settings window to capture GWE configuration (credentials, grid, etc.).

- Native integration, into Slicer's dynamic UI rendering, of the UI capabilities to describe parameter explorations using GWE (P2EL) alongside the currently available single local run:

- CLM UIs will look essentially the same, except that field labels will be links.

- When the user clicks on a field label link, a specialized UI to gather arguments for parameter exploration will pop up.

Work with Mark Anderson

GWE Overview

- Reviewed GWE related items:

- GSlicer3 concepts and features.

- GWE setup and configuration options.

- P2EL concepts and macros.

- New XNAT macros and their relationships with old OASIS macros.

- GWE project documentation tour.

- New GWE features: credentials encryption, order deletion, order pausing/resuming, explicit resource manager type.

- Collected the following feature requests:

- Resource manager drivers to provide specific information about the managed cluster. In Condor's case, for example, it would show the output of the condor_status command.

- Allocations page on web client.

- Descriptive tooltips for table headers and icons all across the web client.

- Confirmation for deleting an order.

- Allow some form of macro categorization.

- Web editor for gwe-grid.xml and gwe-auth.xml

- Restart/redeploy gwe-daemons button.

- Structured P2EL editor.

EM Segmenter Parameter Exploration Experiment

- Successfully ran a parameter exploration experiment consisting of 300 gradient anisotropic diffusion processes in parallel on the SPL cluster. It took a couple of minutes to complete them all across 200 nodes.

- Designed, with Steve's help, an MRML generator script to encapsulate the parameters of an EM Segmenter command line run.

- Mark implemented the MRML generator script to set "InputChannelWeights" values. This script is a specific prototype of a more abstract one, which should include the capability to set values for selected properties.

- Created the P2EL command to generate MRML files and run EM Segmenter processes using them as inputs:

${weight1.A}=$const(0.9,1.1)

${weight1.B}=$const(0.9,1.1)

${weight2.A}=$const(1,1.1)

${weight2.B}=$const(0.9,1)

${weight3.A}=$const(0.9,1.1)

${weight3.B}=$const(0.9,1.1)

${weight4.A}=$const(1,1.1)

${weight4.B}=$const(1,1.1)

${weight5.A}=$const(0.9,1.1)

${weight5.B}=$const(0.9,0.9)

${weight6.A}=$const(1.1)

${weight6.B}=$const(1.1)

${weight7.A}=$const(0.9,1,1.1)

${weight7.B}=$const(0.9,1,1.1)

${weight8.A}=$const(1)

${weight8.B}=$const(1)

${mrml}=/home/mark/in${SYSTEM.JOB_NUM}.mrml

echo ${SYSTEM.JOB_NUM} ${mrml}

${weight1.A} ${weight1.B} ${weight2.A} ${weight2.B} ${weight3.A} ${weight3.B} ${weight4.A} ${weight4.B}

${weight5.A} ${weight5.B} ${weight6.A} ${weight6.B} ${weight7.A} ${weight7.B} ${weight8.A} ${weight8.B}

&&

cat ${mrml}

&&

/share/apps/slicer3.2/Slicer3-build/lib/Slicer3/Plugins/EMSegmentCommandLine

--intermediateResultsDirectory /share/apps/data/new

--resultVolumeFileName /share/apps/data/new/${SYSTEM.JOB_NUM}

--mrmlSceneFileName ${mrml}

&&

/home/mark/mrmld.sh /home/mark/tutorial/AutomaticSegmentation/atlas/test.mrml ${mrml}

${weight1.A} ${weight1.B} ${weight2.A} ${weight2.B} ${weight3.A} ${weight3.B} ${weight4.A} ${weight4.B}

${weight5.A} ${weight5.B} ${weight6.A} ${weight6.B} ${weight7.A} ${weight7.B} ${weight8.A} ${weight8.B}

IMPORTANT: The original experiment was composed of two P2EL statements. After leaving Boston, it occurred to me that they can be merged into the single statement documented here.

- Generated 1296 MRML files using GWE on the SPL cluster for 1296 EM Segmenter runs.

- Launched 1296 EM Segmenter runs using GWE and everything worked fine until all the EM Segmenter processes running on the cluster nodes were suspended for no apparent reason.

- Researched this "sleeping" problem:

- EM Segmenter processes worked fine when launched manually from a terminal.

- EM Segmenter processes went to sleep when launched either directly using condor or through GWE-condor.

- Created a simple 'infinite loop' process to test this symptom.

- Infinite loop process worked fine when launched manually from a terminal.

- Infinite loop process went to sleep after 95 seconds when launched either directly using condor or through GWE-condor.

- Condor proved to be the smoking gun here.

- Mark found a Condor configuration value named "suspend" set to 90 seconds; this is most likely the problem. Research still in progress.
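A test process of the kind described above can be made to report its own suspensions by recording wall-clock heartbeats and looking for gaps. The following Python sketch is not the script used during the visit; the function names and the tolerance factor are hypothetical. It relies on the fact that wall-clock time keeps advancing while a process is stopped, so a suspension shows up as two consecutive heartbeats that are much further apart than the sleep interval.

```python
import time


def heartbeat(duration, interval=1.0, clock=time.time, sleep=time.sleep):
    """Record a wall-clock timestamp every `interval` seconds for about `duration` seconds."""
    start = clock()
    stamps = [start]
    while stamps[-1] - start < duration:
        sleep(interval)
        stamps.append(clock())
    return stamps


def find_gaps(stamps, interval, tolerance=2.0):
    """Return (gap_start, gap_length) pairs where heartbeats were too far apart.

    A gap is reported when two consecutive timestamps differ by more than
    interval * tolerance; the tolerance value is an arbitrary choice.
    """
    gaps = []
    for previous, current in zip(stamps, stamps[1:]):
        elapsed = current - previous
        if elapsed > interval * tolerance:
            gaps.append((previous, elapsed))
    return gaps
```

Running `heartbeat(duration=300)` once from a terminal and once through Condor, then printing `find_gaps(stamps, interval=1.0)` for each run, would show when the process was paused and for how long.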

Work with Madeleine Seeland

DWI Streamline Tractography Parameter Exploration Experiment

- Worked out GWE issues around setting it up on "paul.bwh.harvard.edu":

- Discovered and fixed a critical bug in GWE's password encryption feature that made it impossible to run GWE on 64-bit machines. My environment is 32-bit, so I didn't notice this bug at release time. Need to release this fix as soon as possible.

- Created new option for the user to auto-deploy GWE daemons using different types of bundles other than "zip" ("tar.gz", "tar.bz2").

- Researched problems deploying the daemon on "paul". It turned out that home directories on "paul" have a small disk quota. Need to install daemons on disks with enough space.

- Problems with GWE resource manager auto-discovery. GWE insisted on using the QSub driver as the resource manager driver on this node. GWE's discovery process is not robust enough and got confused because there is a "qstat" application installed on "paul" (same name, but not the "qstat" status application that accompanies qsub). Explicitly set the resource manager on "paul" to local to bypass auto-discovery.

- Madeleine compiled a new version of Slicer including Luca's python enhancements (to launch modules as command lines) on "paul.bwh.harvard.edu".

- GWE has problems launching modules as command lines:

- Command:

/projects/lmi/people/mseeland/SlicerNew/Slicer3-build/Slicer3

--no_splash --evalpython "from Slicer import slicer;

volNode=Slicer.slicer.VolumesGUI.GetLogic().AddArchetypeVolume(

'/projects/lmi/people/mseeland/Images/NeurosurgicalPlanningTutorialData.zip_FILES/NeurosurgicalPlanningTutorialData/patient_dataset/DTI/epi-header.nhdr','FirstImage',0);

roiNode = Slicer.slicer.VolumesGUI.GetLogic().AddArchetypeVolume(

'/projects/lmi/people/mseeland/Slicer/Slicer3-build/Working.nhdr','ROIImage',1); p = Slicer.Plugin('DWI Streamline Tractography');

nodeType = 'vtkMRMLFiberBundleNode'; outputNode = slicer.MRMLScene.CreateNodeByClass(nodeType); fiberBundleNode=Slicer.slicer.MRMLScene.AddNode(outputNode);

p.Execute(inputVolume=volNode.GetID(),oneBaseline=False, inputROI=roiNode.GetID(),

seedPoint=[[-9.85594, -17.7241, -30.953]], outputFibers=fiberBundleNode.GetID(), tensorType='Two-Tensor', anisotropy='Cl1',

anisotropyThreshold=0.1, stoppingCurvature=0.8, integrationStepLength=0.5, confidence=1, fraction=0.1, minimumLength=40,

maximumLength=800, roiLabel=1, numberOfSeedpointsPerVoxel=1, fiducialRegionSize=1.0, fiducialStepSize =1.0, fiducial=True);

Slicer.slicer.ModelsGUI.GetLogic().SaveModel('/projects/lmi/people/mseeland/Images/testest.vtk',fiberBundleNode)"

- Error:

Tk_Init error: no display name and no $DISPLAY environment variable

Error: InitializeTcl failed

child process exited abnormally

- I encounter the same errors when launching this module manually, but Madeleine can launch it manually just fine. I wonder whether it is some setting she has in her startup environment. Work in progress.
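Since the error text says that no `$DISPLAY` variable is available, one way to follow up is to compare the environment of a session where the launch works with one where it fails. The Python sketch below assumes that the output of the `env` command has been captured from each session; the helper names are hypothetical, and whether `DISPLAY` is the only relevant difference is a guess rather than a finding.

```python
import os


def parse_env(text):
    """Parse the output of `env` into a dict (multi-line values are not handled)."""
    result = {}
    for line in text.splitlines():
        name, sep, value = line.partition("=")
        if sep:
            result[name] = value
    return result


def env_diff(working, failing):
    """Map each variable that differs to its (working, failing) values; None means unset."""
    names = sorted(set(working) | set(failing))
    return {
        name: (working.get(name), failing.get(name))
        for name in names
        if working.get(name) != failing.get(name)
    }


def display_missing(env=None):
    """Return True if DISPLAY is unset or empty in the given (or current) environment."""
    env = os.environ if env is None else env
    return not env.get("DISPLAY")
```

Calling `display_missing()` in the process that launches Slicer gives a quick check before the command is submitted, and `env_diff` lists every other variable worth inspecting.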