Improving Depth Perception in Interventional Augmented Reality Visualization: Sonification (Project Week 25)


Key Investigators

- Simon Drouin (NeuroImaging and Surgical Technologies (NIST) Lab, Canada)

- David Black (University of Bremen; Fraunhofer Institute for Medical Image Computing MEVIS, Bremen, Germany)

- Christian Hansen (University of Magdeburg, Germany)

- Florian Heinrich (University of Magdeburg, Germany)

- André Mewes (University of Magdeburg, Germany)

Project Description

| Objective

|

Approach and Plan

|

Progress and Next Steps

|

- Disuss state of the art of "visual" AR for interventional radiology/surgery

- Find and prototype appropriate auditory display methods for efficient depth perception feedback

- Discover optimal mix between auditory and visual feedback methods for depth perception

|

- Discussion about possibilties to support depth persception (visually and auditory)

- Implementation of new depth cues

|

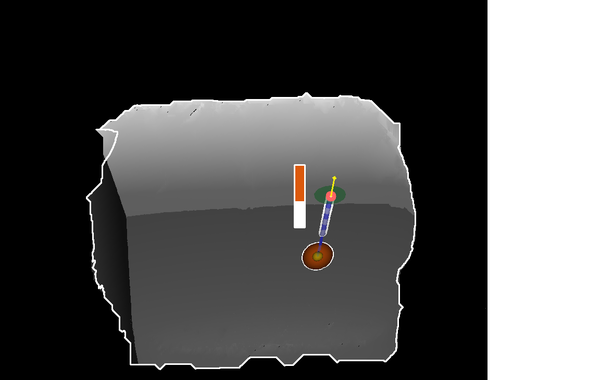

- Enabled kinetic depth cues for Needle Navigation Software: direct control of scene camera movement with head position tracking

- Sound connection could not be implemented during this week (had to rework parts the own code base first, took much time)

- Started to implement head tracking -> need some different hardware

- Next steps will further focus on head tracking and fusion with sonification

|

|

|

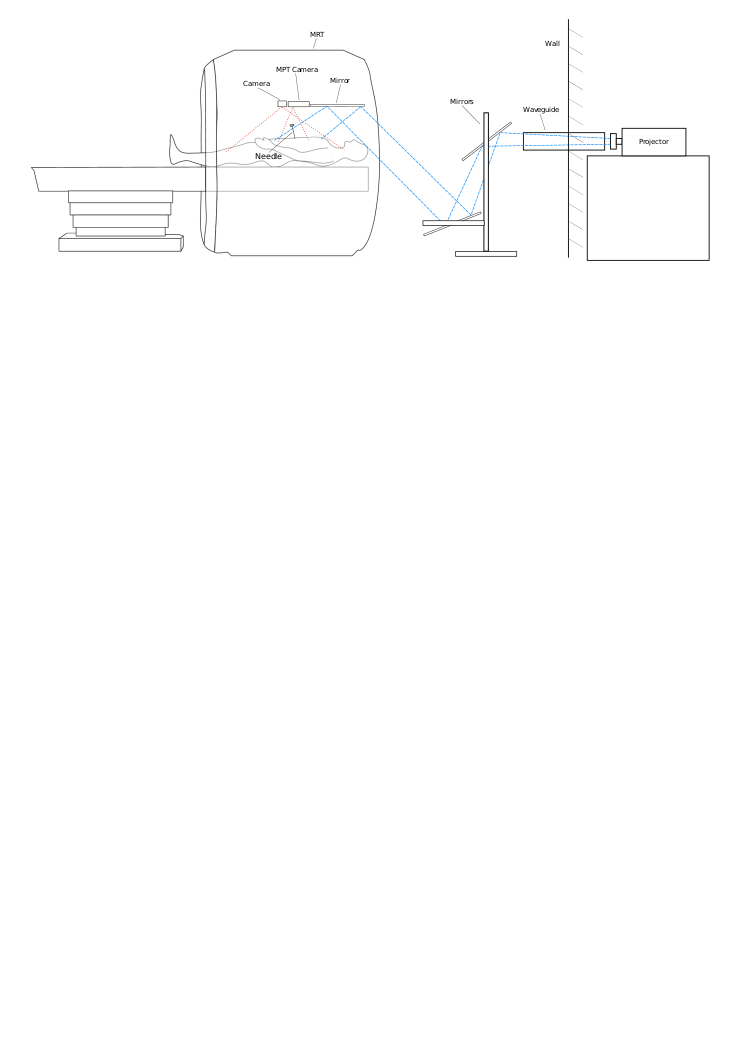

| Depth Cues for projective AR Needle Navigation

|
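The kinetic depth cue listed under Progress and Next Steps couples the scene camera to the tracked head position. The following is a minimal sketch of one way such a coupling could work, not the Needle Navigation Software's actual code. The function name `head_coupled_camera`, its parameters, the `gain` factor, and the assumption that head and camera coordinates share one frame are all hypothetical.

```python
import math


def head_coupled_camera(head_pos, head_ref, cam_ref, focal_point, gain=1.0):
    """Return a camera position that follows head motion (hypothetical helper).

    All positions are 3-tuples in one shared coordinate frame (an assumption).
    The camera is shifted by the scaled head displacement and then projected
    back onto the sphere around the focal point, so the view orbits the
    target instead of drifting away from it.
    """
    # Displacement of the head from its calibrated rest position.
    delta = [gain * (h - r) for h, r in zip(head_pos, head_ref)]
    # Shift the reference camera position by that displacement.
    shifted = [c + d for c, d in zip(cam_ref, delta)]
    # Preserve the original camera-to-target distance.
    radius = math.dist(cam_ref, focal_point)
    offset = [s - f for s, f in zip(shifted, focal_point)]
    length = math.hypot(*offset)
    if length == 0.0:
        # Degenerate case: shifted camera sits on the target, keep the reference view.
        return tuple(cam_ref)
    return tuple(f + radius * o / length for f, o in zip(focal_point, offset))
```

Calling this once per tracking update and passing the result to the renderer's camera would produce motion parallax around the focal point; a `gain` above 1 exaggerates the effect for small head movements.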

Background and References

- A Survey of Auditory Display in Image-Guided Interventions

- Improving spatial perception of vascular models using supporting anchors and illustrative visualization