Difference between revisions of "Project Week 25/Segmentation for improving image registration of preoperative MRI with intraoperative ultrasound images for neuro-navigation"

| (27 intermediate revisions by 4 users not shown) | |||

| Line 1: | Line 1: | ||

__NOTOC__ | __NOTOC__ | ||

| − | + | Back to [[Project_Week_25#Projects|Projects List]] | |

| − | |||

| − | |||

==Key Investigators== | ==Key Investigators== | ||

| − | + | *[http://www.mic.uni-bremen.de/jennifer-nitsch/ Jennifer Nitsch] (University of Bremen, Germany) | |

| − | + | *[http://www.mic.uni-bremen.de/cmt-management-team/scheherazade-kras/ Scheherazade Kraß] (University of Bremen, Germany) | |

| − | + | *[http://campar.in.tum.de/Main/JuliaRackerseder Julia Rackerseder] (Technical University of Munich, Germany) | |

| − | |||

| − | |||

==Project Description== | ==Project Description== | ||

| Line 19: | Line 15: | ||

| | | | ||

<!-- Objective bullet points --> | <!-- Objective bullet points --> | ||

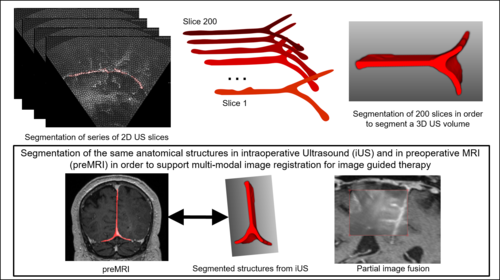

| − | + | *Segmented multiple anatomical structures/landmarks in both MRI and ultrasound (US) images using machine learning algorithms (the applicability of deep learning (DL) algorithms is currently being tested, e.g., DL for US images and data augmentation). | |

| + | |||

| + | *The next step would be to analyze the improvement of registration quality with different segmentations/generated landmarks in order to adapt the segmentation algorithms. | ||

| + | |||

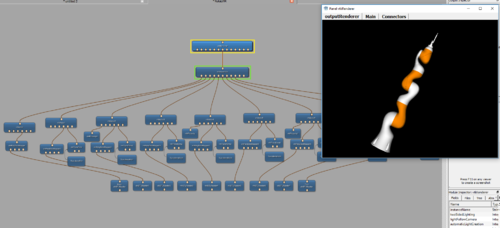

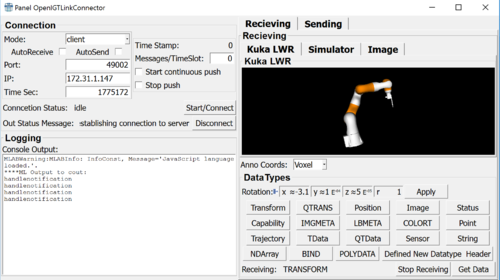

| + | *(Kuka LWR project, Shery) Using advanced segmentation and registration features for iterative robot control. The respective status of the Kuka LWR iiwa (position, configuration) is simulated and visualized as a 3-D model in MeVisLab. Medical data sets, including the target pose (position and path towards it), can be sent and received via the OpenIGTLink network protocol as well. | ||

| − | |||

| | | | ||

<!-- Approach and Plan bullet points --> | <!-- Approach and Plan bullet points --> | ||

| − | + | *Starting from multi-modal image segmentation in preoperative MRI and intraoperative ultrasound images, | |

| − | + | the question to discuss is in which "form" one or multiple segmented structures should influence the registration result. | |

| + | *Get data from public data archive of US and MRI images and segment anatomical structures in both modalities. | ||

| + | |||

| + | *Discussion: Benefit of additional information of multiple segmentations for registration. | ||

| + | |||

| | | | ||

| − | <!-- Progress and Next steps (fill out at the end of project week) | + | <!-- Progress and Next steps (fill out at the end of project week), Please start each sentence in a new line. --> |

| − | Please start each sentence in a new line. | + | *Working with the public RESECT dataset [http://sintef.no/projectweb/usigt-en/data/] |

| + | *Using segmentations to register MRI with US (algorithms are not overfitted on previously used data) | ||

| + | *Locally refine with image-based registration | ||

| + | *Plastimatch B-spline deformable registration module works well for image-based registration [https://www.slicer.org/wiki/Documentation/4.6/Modules/PlmBSplineDeformableRegistration] | ||

| + | |||

| + | TODO: | ||

| + | *Implement the LC2 metric to improve image-based registration between US and MRI (Wein, Wolfgang, et al. "Global registration of ultrasound to MRI using the LC2 metric for enabling neurosurgical guidance." International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer, Berlin, Heidelberg, 2013.) | ||

| + | *Create module to load RESECT dataset including landmarks for ground truth | ||

| + | (Kuka LWR project, Shery) | ||

| + | *1.) Plus setup config file adapted to communicate via OpenIGTLink module in MeVisLab. | ||

| + | *2.) Further development of the project controlling the Kuka LWR: new marker-based point selection that could be used to simulate needle placement in MRI images. | ||

|} | |} | ||

==Illustrations== | ==Illustrations== | ||

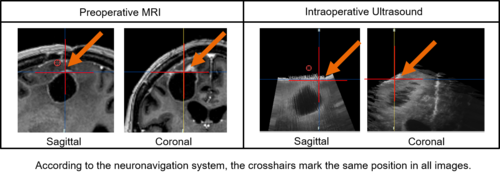

| + | [[image:RegistrationInNeuroNavigationSystem.png|500px]] | ||

| + | |||

| + | Using segmented structures as guiding frame for multi-modal image registration: | ||

| + | [[image:MultimodalImageSegmentation3.png|500px]] | ||

| + | |||

| + | LWR Robot simulation in MeVisLab: | ||

| + | |||

| + | [[image:Picture 2016-12-19 13 55 31.png|500px]] | ||

| + | [[image:Picture 2017-06-26 11 04 59.png|500px]] | ||

==Background and References== | ==Background and References== | ||

<!-- Use this space for information that may help people better understand your project, like links to papers, source code, or data --> | <!-- Use this space for information that may help people better understand your project, like links to papers, source code, or data --> | ||

| + | In glioma surgery, neuronavigation systems assist in determining the tumor's location and estimating its extent. However, the intraoperative situation diverges considerably from the preoperative situation in the MRI scan | ||

| + | displayed on the navigation system. The surgeon must mentally account for the movement of brain tissue during surgery, caused by brain shift | ||

| + | and tissue removal, a task that becomes more challenging in later | ||

| + | phases of the tumor resection. | ||

| + | |||

| + | Moreover, this mental compensation is exhausting, and the shift of cerebral structures must be | ||

| + | expected to be non-uniform, which implies a deformation of the image data. This makes it especially hard | ||

| + | to predict and model mentally. | ||

| + | |||

| + | Thus, intraoperative imaging modalities are used to visualize the current intraoperative situation. Intraoperative ultrasound (iUS), | ||

| + | for instance, is easy to use intraoperatively, offers real-time information, is widely available at low cost, and | ||

| + | causes no radiation exposure. These are important advantages when iUS is compared with intraoperative CT (iCT) or intraoperative MRI (iMRI). However, in | ||

| + | image-guided surgery, precise image registration of iUS and preoperative MRI (preMRI), and the image fusion based on it, is still | ||

| + | an unsolved problem. The different representations of cerebral structures in the two modalities, as well as | ||

| + | artifacts within the iUS, hinder direct fusion of both modalities. | ||

Latest revision as of 09:56, 30 June 2017


Key Investigators

- Jennifer Nitsch (University of Bremen, Germany)

- Scheherazade Kraß (University of Bremen, Germany)

- Julia Rackerseder (Technical University of Munich, Germany)

Project Description

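Objective

- Segmented multiple anatomical structures/landmarks in both MRI and ultrasound (US) images using machine learning algorithms (the applicability of deep learning (DL) algorithms is currently being tested, e.g., DL for US images and data augmentation).

- The next step would be to analyze the improvement of registration quality with different segmentations/generated landmarks in order to adapt the segmentation algorithms.

- (Kuka LWR project, Shery) Using advanced segmentation and registration features for iterative robot control. The respective status of the Kuka LWR iiwa (position, configuration) is simulated and visualized as a 3-D model in MeVisLab. Medical data sets, including the target pose (position and path towards it), can be sent and received via the OpenIGTLink network protocol as well.

Approach and Plan

- Starting from multi-modal image segmentation in preoperative MRI and intraoperative ultrasound images, the question to discuss is in which "form" one or multiple segmented structures should influence the registration result.

- Get data from public data archive of US and MRI images and segment anatomical structures in both modalities.

- Discussion: Benefit of additional information of multiple segmentations for registration.

Progress and Next Steps

- Working with the public RESECT dataset (http://sintef.no/projectweb/usigt-en/data/)

- Using segmentations to register MRI with US (algorithms are not overfitted on previously used data)

- Locally refine with image-based registration

- Plastimatch B-spline deformable registration module works well for image-based registration (https://www.slicer.org/wiki/Documentation/4.6/Modules/PlmBSplineDeformableRegistration)

TODO:

- Implement the LC2 metric to improve image-based registration between US and MRI (Wein, Wolfgang, et al. "Global registration of ultrasound to MRI using the LC2 metric for enabling neurosurgical guidance." International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer, Berlin, Heidelberg, 2013.)

- Create module to load RESECT dataset including landmarks for ground truth

(Kuka LWR project, Shery)

- 1.) Plus setup config file adapted to communicate via OpenIGTLink module in MeVisLab.

- 2.) Further development of the project controlling the Kuka LWR: new marker-based point selection that could be used to simulate needle placement in MRI images.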
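The progress notes mention registering MRI with US from segmentations and using landmarks as ground truth. As a minimal sketch of that idea, and not the project's actual implementation, the following assumes that corresponding 3-D points (for example centroids of the same structure segmented in both modalities) are already available. It estimates a least-squares rigid transform with the Kabsch/SVD method and scores it with the mean target registration error over landmark pairs. The function names `rigid_from_landmarks` and `mean_tre` are hypothetical.

```python
import numpy as np


def rigid_from_landmarks(moving, fixed):
    """Least-squares rigid transform (R, t) mapping `moving` onto `fixed`.

    Both inputs are (N, 3) arrays of corresponding points. Assumption: the
    correspondences come from structures segmented in both US and MRI.
    """
    moving = np.asarray(moving, dtype=float)
    fixed = np.asarray(fixed, dtype=float)
    mu_m, mu_f = moving.mean(axis=0), fixed.mean(axis=0)
    # Cross-covariance of the centered point sets.
    H = (moving - mu_m).T @ (fixed - mu_f)
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so that R is a rotation, not a reflection.
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = mu_f - R @ mu_m
    return R, t


def mean_tre(R, t, moving_landmarks, fixed_landmarks):
    """Mean Euclidean distance between transformed and reference landmarks."""
    moved = np.asarray(moving_landmarks, dtype=float) @ R.T + t
    diff = moved - np.asarray(fixed_landmarks, dtype=float)
    return float(np.linalg.norm(diff, axis=1).mean())
```

A rigid estimate like this would only serve as an initialization. The non-uniform tissue shift described in the background still calls for a local, deformable refinement such as the B-spline registration mentioned above.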

Illustrations

Using segmented structures as guiding frame for multi-modal image registration:

LWR Robot simulation in MeVisLab:

Background and References

In glioma surgery, neuronavigation systems assist in determining the tumor's location and estimating its extent. However, the intraoperative situation diverges considerably from the preoperative situation in the MRI scan displayed on the navigation system. The surgeon must mentally account for the movement of brain tissue during surgery, caused by brain shift and tissue removal, a task that becomes more challenging in later phases of the tumor resection.

Moreover, this mental compensation is exhausting, and the shift of cerebral structures must be expected to be non-uniform, which implies a deformation of the image data. This makes it especially hard to predict and model mentally.

Thus, intraoperative imaging modalities are used to visualize the current intraoperative situation. Intraoperative ultrasound (iUS), for instance, is easy to use intraoperatively, offers real-time information, is widely available at low cost, and causes no radiation exposure. These are important advantages when iUS is compared with intraoperative CT (iCT) or intraoperative MRI (iMRI). However, in image-guided surgery, precise image registration of iUS and preoperative MRI (preMRI), and the image fusion based on it, is still an unsolved problem. The different representations of cerebral structures in the two modalities, as well as artifacts within the iUS, hinder direct fusion of both modalities.
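Because the two modalities represent the same structures so differently, a plain intensity difference is not a useful similarity measure. The LC2 metric cited in the TODO list addresses this by locally explaining the US intensity as a linear combination of MRI intensity, MRI gradient magnitude, and a constant. The following is a simplified 2-D sketch of that idea under stated assumptions: it uses non-overlapping square patches and weights each patch by its local US variance, which is my reading of the approach rather than a reference implementation. The function name `lc2_similarity` and the parameters `patch` and `eps` are hypothetical.

```python
import numpy as np


def lc2_similarity(us, mri, patch=9, eps=1e-6):
    """Simplified LC2-style similarity between two aligned 2-D images.

    For each patch, the US intensities are fitted by least squares as
    alpha * mri + beta * |grad(mri)| + gamma. The local score is the
    fraction of US variance explained by that fit.
    """
    us = np.asarray(us, dtype=float)
    mri = np.asarray(mri, dtype=float)
    if us.shape != mri.shape:
        raise ValueError("images must have the same shape")
    gy, gx = np.gradient(mri)
    grad = np.hypot(gx, gy)
    weighted_sum, weight_total = 0.0, 0.0
    for r in range(0, us.shape[0] - patch + 1, patch):
        for c in range(0, us.shape[1] - patch + 1, patch):
            window = (slice(r, r + patch), slice(c, c + patch))
            u = us[window].ravel()
            var_u = u.var()
            if var_u < eps:
                continue  # skip patches without US signal
            design = np.column_stack(
                [mri[window].ravel(), grad[window].ravel(), np.ones(u.size)]
            )
            theta, *_ = np.linalg.lstsq(design, u, rcond=None)
            residual = u - design @ theta
            local = 1.0 - residual.dot(residual) / (u.size * var_u)
            # Assumption: weight each patch by its local US variance.
            weighted_sum += var_u * local
            weight_total += var_u
    return weighted_sum / weight_total if weight_total > 0 else 0.0
```

In a registration loop, this value would be evaluated on the MRI slice resampled under the current transform and maximized by an optimizer. Dense, overlapping neighborhoods and a 3-D extension are left out here for brevity.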