Difference between revisions of "2011 Winter Project Week:Breakout Multi-Image Engineering"

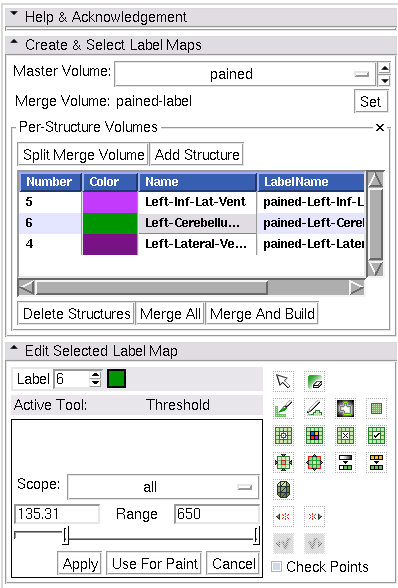

Figure (Editor3.6-interface.png): Example of interface for breaking and assembling a multivolume

Latest revision as of 17:25, 10 January 2011


Agenda breakout session: Multi-Image Engineering in Slicer

Wednesday 8-10am

Session Leaders: Jim Miller, Steve Pieper, Alex Yarmakovich, Junichi Tokuda, Demian Wasserman


Background

Increasingly, the data we have for individual subjects in Slicer is "multi-something". Examples are:

- multi-channel (T1, T2, FLAIR, dual echo, etc.)

- DWI

- RGB and other multi-channel images

- time series, including DCE, cardiac cycle, follow-up studies in cancer, multiple intra-procedural volumes (with potential translation, rotation, deformation)

- multiple subjects for atlas formation / population studies?

- results from processing using different parameters?

Current State

Parts of the ability to handle such multi-dimensional volumes already exist in the DWI and 4D infrastructures:

I/O

- DICOM to NRRD

- TimeSeriesBundleNode is a MRML node to organize 4D time series data.

Display

- In the Volumes module, a slider lets the user look at any of the individual volumes in a DWI data set.

- In the 4D display module, a movie-player-like capability exists.

- The DTI infrastructure allows the display of derived scalar values such as FA and color by orientation.

- Add an interface to break a multivolume into its elements and to assemble the elements into a multivolume. Equivalent, but more constrained, capabilities have recently been added to the Editor module.
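
The break/assemble operation in the last item can be sketched roughly as follows. This is only an illustration under simple assumptions: a multivolume is treated as an ordered list of equally shaped 3D frames with one label per frame. The names `assemble`, `split`, and `shape`, and the nested-list representation, are hypothetical and are not the Editor module's or Slicer's API.

```python
from typing import Dict, List, Sequence, Tuple

Volume = List[List[List[float]]]  # one 3D frame indexed as [k][j][i]


def shape(volume: Volume) -> Tuple[int, int, int]:
    """Return the (slices, rows, columns) extent of a rectangular 3D frame."""
    return (len(volume), len(volume[0]), len(volume[0][0]))


def assemble(
    elements: Dict[str, Volume], order: Sequence[str]
) -> Tuple[List[str], List[Volume]]:
    """Stack named 3D volumes into one multivolume, in the given order."""
    frames = [elements[name] for name in order]
    shapes = {shape(frame) for frame in frames}
    if len(shapes) > 1:
        # Frames must share one grid before they can form a multivolume.
        raise ValueError(f"frames have different shapes: {sorted(shapes)}")
    return list(order), frames


def split(labels: Sequence[str], frames: Sequence[Volume]) -> Dict[str, Volume]:
    """Break a multivolume back into its named 3D elements."""
    if len(labels) != len(frames):
        raise ValueError("need exactly one label per frame")
    return dict(zip(labels, frames))
```

Keeping a label next to each frame is what makes the round trip lossless: splitting the result of `assemble` returns the same named volumes that went in.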

Existing Processing capabilities

Multivolumes are processed in Slicer in specialized ways.

- Parametric analysis:

  - DTI estimation from DWI

  - Tofts parameter estimation from DCE

- Segmentation:

  - EM segmentation from multi-channel morphology data

- Registration:

  - BRAINSFit can, in principle, register multi data sets to each other, and GTRACT has a first solution that is engineered for DWI.

The Need

All of the current examples are special cases. If we can generalize them into a single architecture for multi data, there is a lot of potential for cross-benefits. We need a common engineering and UI philosophy for everybody who is working on these topics.

Not just images but many (all?) MRML node types should support temporal or other sequences, for example transforms, models, and fiducials.

I/O

- We should have a single module to organize the data. DICOM to NRRD is a good start, but we also need to handle separate T1 and T2 acquisitions, as well as non-DICOM data. Perhaps something like: load all data into Slicer, associate the volumes with each other in a special module, and write them out as a single NRRD file.

- Non-image nodes?

- May point to data on disk without immediately loading it, e.g., a description of sequences/sets of images to be processed

- Support sharing: should be able to maintain the grouping of data for distribution to others (packaging, not just I/O)

  - Unit = collection of MRML files? (e.g., an xcat file is a standard format to describe results of a database search, supported by XNAT and MIDAS)
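
One way to picture "pointing to data on disk without immediately loading it" while still keeping the grouping shareable is sketched below. Everything here is an assumption made for illustration: `FrameRef`, `MultiDataSet`, and the `reader` callable are hypothetical names, the manifest layout is invented and is not the xcat format, and a real implementation would plug in an actual NRRD or DICOM reader.

```python
from dataclasses import dataclass, field
from pathlib import Path
from typing import Callable, Dict, List, Optional


@dataclass
class FrameRef:
    """One element of a multi data set, referenced by path (hypothetical)."""
    path: Path
    label: str  # e.g. "T1", "T2", or a time point
    _cache: Optional[bytes] = field(default=None, repr=False)

    def load(self, reader: Callable[[Path], bytes]) -> bytes:
        """Read the file on first use only, then reuse the cached result."""
        if self._cache is None:
            self._cache = reader(self.path)
        return self._cache


@dataclass
class MultiDataSet:
    """A named, ordered group of frame references that travels as one unit."""
    name: str
    frames: List[FrameRef] = field(default_factory=list)

    def add(self, path: str, label: str) -> None:
        """Register a file as part of the group without opening it."""
        self.frames.append(FrameRef(Path(path), label))

    def manifest(self) -> Dict[str, object]:
        """Describe the grouping without touching the files themselves."""
        return {
            "name": self.name,
            "frames": [
                {"label": f.label, "path": f.path.as_posix()} for f in self.frames
            ],
        }
```

Because the manifest only records labels and paths, it can be written next to the data and handed to someone else, which is the packaging concern raised above, separate from the I/O itself.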

Display

- We should create a single visualization infrastructure to handle multi data (compare viewers, RGB channels, time series movies) with equivalent slice viewers and 3D viewers.

- The compare view should be able to show multi data in compare viewers. When there are only a few volumes, we can display them all. If there is a large number of volumes, we will only be able to display a few. Some engineering work will be needed to make this easy on the user.
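
As a small sketch of the "display all when few, only some when many" behaviour, the function below picks which volume indices to show for a given number of compare viewers. The evenly spaced selection and the name `pick_frames` are assumptions for illustration only; the page does not say how the subset should be chosen.

```python
from typing import List


def pick_frames(n_volumes: int, n_viewers: int) -> List[int]:
    """Choose which volume indices to show in a limited number of viewers."""
    if n_volumes < 0 or n_viewers < 0:
        raise ValueError("counts must be non-negative")
    if n_volumes <= n_viewers:
        # Few volumes: every one of them gets its own viewer.
        return list(range(n_volumes))
    if n_viewers == 0:
        return []
    if n_viewers == 1:
        return [0]
    # Many volumes: spread the viewers evenly from the first to the last frame.
    return [(i * (n_volumes - 1)) // (n_viewers - 1) for i in range(n_viewers)]
```

For example, `pick_frames(10, 4)` selects frames 0, 3, 6 and 9, while `pick_frames(3, 4)` simply returns all three frames.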

Processing

- Common API for processing: EM segmentation, pharmacokinetic models, DWI filtering, and tensor estimation should all plug into the data in the same way.

- Registration: where does it fit? Should we provide the capability to assign a transformation to each timepoint/channel? Rigid or non-rigid? If yes, we should probably keep the original data accessible; storing only the resampled data is probably not a good option.

  - Generalize eddy current correction for general-purpose multi-data fusion? Same subject, affine, multiple time points. Add N4 bias correction?
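
The two processing ideas above, a common plug-in point and a per-timepoint transform stored beside the untouched original data, could look roughly like this. It is a sketch under stated assumptions rather than a proposed Slicer design: `Frame`, `Processor`, `run`, `apply_point`, and `mean_per_frame` are hypothetical names, the transform is restricted to a 4x4 affine matrix, and the toy processor merely stands in for a real step such as tensor estimation.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Sequence

Matrix = List[List[float]]  # 4x4 homogeneous transform, row-major


def identity() -> Matrix:
    """Return a fresh 4x4 identity transform."""
    return [[1.0 if r == c else 0.0 for c in range(4)] for r in range(4)]


@dataclass
class Frame:
    """One time point or channel: untouched data plus its own transform."""
    label: str
    data: Sequence[float]  # original samples, never overwritten
    to_reference: Matrix = field(default_factory=identity)


def apply_point(m: Matrix, p: Sequence[float]) -> List[float]:
    """Map a 3D point through a 4x4 affine transform."""
    x, y, z = p
    return [m[r][0] * x + m[r][1] * y + m[r][2] * z + m[r][3] for r in range(3)]


# Any processing step is a callable from the ordered frames to one result.
Processor = Callable[[Sequence[Frame]], object]


def run(frames: Sequence[Frame], processor: Processor) -> object:
    """Hand the same frame sequence to whichever processing step is plugged in."""
    return processor(frames)


def mean_per_frame(frames: Sequence[Frame]) -> List[float]:
    """Toy stand-in for a real step such as tensor estimation."""
    return [sum(f.data) / len(f.data) for f in frames]
```

Since each frame carries its transform instead of resampled samples, a registration step can update `to_reference` without ever overwriting `data`, and resampling can be deferred until a viewer or filter actually needs it.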