2013 Project Week:SteeredRegistration

Revisions by Dirkpadfield

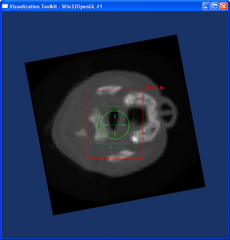

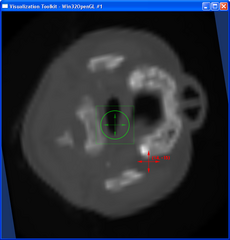

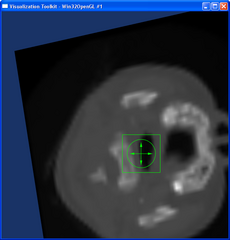

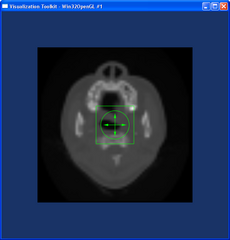

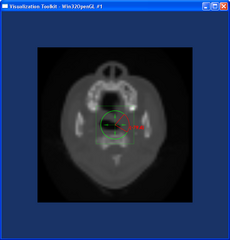

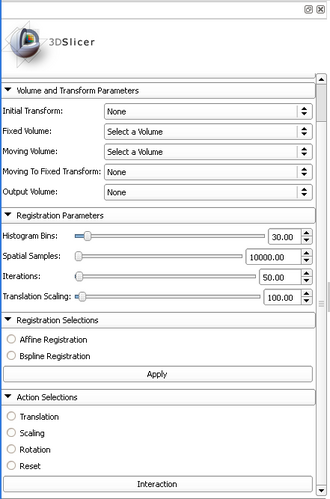

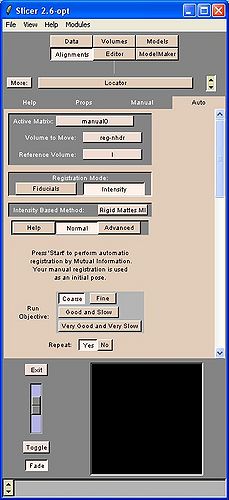

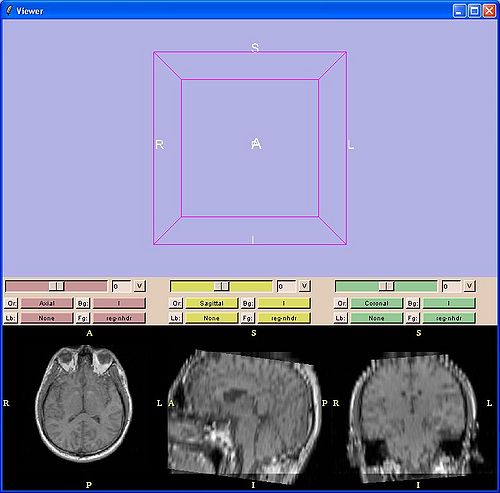

Latest revision as of 17:12, 11 January 2013

Key Investigators

- GRC: Dirk Padfield, Jim Miller

- Isomics: Steve Pieper

- BWH: Tina Kapur, Ron Kikinis

Objective

We are developing methods to perform interactive registration in 3D Slicer. The final goal is to provide the user with an interface for interacting with the registration program during the matching process. This will enable the user to run the automated registration and to correct it in real time through gestures.

Approach, Plan

Our approach to developing the interactive registration module is to first build a loadable extension that performs intensity-based registration, displays the intermediate results, and lets the user correct the progress at any point if the intermediate results are visually unsatisfactory. Mouse click-and-drag gestures will let the user supply translation, rotation, and scale for rigid registration, and perhaps landmarks or directional markers for deformable registration. These gestures will be used to correct/update the current transformation, which will then be fed back to the automated registration algorithm as the updated transform. To keep interaction delays short, the module will continually alternate short runs of automated registration iterations with processing of any user interactions waiting in the event queue. As a test, the user could drag the images apart, watch them become aligned again, and then drag them apart again. The module is particularly useful when the registration algorithm goes astray, since the user can then quickly bring the images back into approximate alignment and let the automated algorithm compute the fine registration.
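The interaction loop described above can be sketched as follows. This is a minimal, hypothetical illustration, not the module's code: to stay self-contained it replaces the intensity-based metric with a mean squared distance between known point correspondences, estimates a translation only, and models user drags as `(dx, dy)` offsets placed in a standard-library `queue.Queue`. All names (`Translation`, `optimizer_step`, `steered_registration`, `iterations_per_cycle`) are assumptions made for illustration.

```python
import queue
from dataclasses import dataclass

# Hypothetical toy model, not the Slicer module: each "image" is reduced to a list of
# corresponding 2D points, and only a translation (tx, ty) mapping moving onto fixed
# is estimated. A real module would use an intensity-based metric instead.

@dataclass
class Translation:
    tx: float = 0.0
    ty: float = 0.0

def mean_squared_error(fixed, moving, t):
    """Mean squared distance between fixed points and translated moving points."""
    return sum((mx + t.tx - fx) ** 2 + (my + t.ty - fy) ** 2
               for (fx, fy), (mx, my) in zip(fixed, moving)) / len(fixed)

def optimizer_step(fixed, moving, t, learning_rate=0.25):
    """One gradient-descent step on mean_squared_error with respect to (tx, ty)."""
    n = len(fixed)
    gx = sum(2.0 * (mx + t.tx - fx) for (fx, _), (mx, _) in zip(fixed, moving)) / n
    gy = sum(2.0 * (my + t.ty - fy) for (_, fy), (_, my) in zip(fixed, moving)) / n
    t.tx -= learning_rate * gx
    t.ty -= learning_rate * gy

def steered_registration(fixed, moving, t, events, cycles=20, iterations_per_cycle=5):
    """Alternate short automated runs with user corrections waiting in `events`."""
    for _ in range(cycles):
        # Apply every pending user drag without blocking; each event is (dx, dy).
        while True:
            try:
                dx, dy = events.get_nowait()
            except queue.Empty:
                break
            t.tx += dx
            t.ty += dy
        # Resume the optimizer from the (possibly user-corrected) transform.
        for _ in range(iterations_per_cycle):
            optimizer_step(fixed, moving, t)
    return t

if __name__ == "__main__":
    fixed = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
    moving = [(x - 3.0, y + 2.0) for x, y in fixed]  # aligned when t = (3, -2)
    events = queue.Queue()
    events.put((10.0, 10.0))  # simulate the user dragging the images apart
    t = steered_registration(fixed, moving, Translation(), events)
    print(round(t.tx, 6), round(t.ty, 6))  # prints 3.0 -2.0
```

Queuing the `(10.0, 10.0)` drag mimics the user pulling the images apart; the following short optimizer runs bring the translation back to the aligned value. The non-blocking `get_nowait()` keeps the loop from stalling when no gesture is pending, and `iterations_per_cycle` trades responsiveness to gestures against optimizer progress per cycle. In an actual GUI the queue would be filled by mouse handlers rather than by `events.put`.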

Progress

So far, we have implemented user interaction for translation, rotation, and scale. To avoid moving back and forth between the image and the GUI to switch among translation, rotation, and scale, we tested vtkAffineWidget, which represents all three interactions in a single widget whose changes feed back to the images (see figures). We also examined the interactive registration available in Slicer2 to see which parts of that framework could be replicated in Slicer4. As a next step, we plan to build a first version of the interaction loop that integrates the automated registration with the manual feedback.

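- vtkAffineWidget (panels: Affine widget, Rotation, Scaling, Translation, End)

- Previous Interactive Registration (panel: Previous interaction)

- Slicer2 Interactive Registration (panels: Interaction GUI, Viewer)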
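The translation, rotation, and scale corrections described above each reduce to composing a small affine matrix onto the current transform. The sketch below is a hypothetical 2D illustration in homogeneous coordinates using plain Python lists; it does not use vtkAffineWidget or any Slicer API, and the helper names (`about_center`, `apply_gesture`, `transform_point`, etc.) are assumptions. Rotation and scaling are applied about a chosen center point rather than the origin, which is one plausible choice for an on-image widget.

```python
import math

# Hypothetical 2D sketch: transforms are 3x3 homogeneous matrices (row-major nested lists).

def matmul(a, b):
    """Return the 3x3 matrix product a * b."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]

def translation(dx, dy):
    """Matrix for a drag gesture that shifts the image by (dx, dy)."""
    return [[1.0, 0.0, dx], [0.0, 1.0, dy], [0.0, 0.0, 1.0]]

def rotation(theta):
    """Counter-clockwise rotation by theta radians about the origin."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

def scaling(sx, sy):
    """Axis-aligned scaling about the origin."""
    return [[sx, 0.0, 0.0], [0.0, sy, 0.0], [0.0, 0.0, 1.0]]

def about_center(m, cx, cy):
    """Re-express m so that it acts about (cx, cy) instead of the origin."""
    return matmul(translation(cx, cy), matmul(m, translation(-cx, -cy)))

def apply_gesture(current, gesture):
    """Compose a user correction after the current transform (left-multiply)."""
    return matmul(gesture, current)

def transform_point(m, x, y):
    """Map the point (x, y) through the homogeneous matrix m."""
    return (m[0][0] * x + m[0][1] * y + m[0][2],
            m[1][0] * x + m[1][1] * y + m[1][2])

if __name__ == "__main__":
    current = translation(0.0, 0.0)  # identity: no correction yet
    current = apply_gesture(current, about_center(rotation(math.pi / 2), 1.0, 1.0))
    current = apply_gesture(current, translation(3.0, 0.0))
    current = apply_gesture(current, about_center(scaling(2.0, 2.0), 4.0, 2.0))
    print(transform_point(current, 1.0, 1.0))
```

Left-multiplying with `matmul(gesture, current)` applies each correction in the fixed-image frame after the existing transform; right-multiplying would instead apply it in the moving-image frame. In a loop like the one planned, the composed matrix would be what gets handed back to the optimizer. A 3D version would follow the same pattern with 4x4 matrices.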

Delivery Mechanism

This work will be delivered to the NA-MIC Kit as (options marked YES below):

- ITK Module
- Slicer Module
  - Built-in
  - Extension -- commandline
  - Extension -- loadable YES
- Other (Please specify)