Difference between revisions of "Projects:PathologyAnalysis"


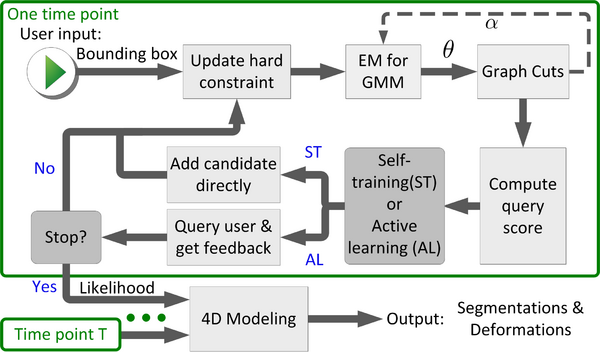

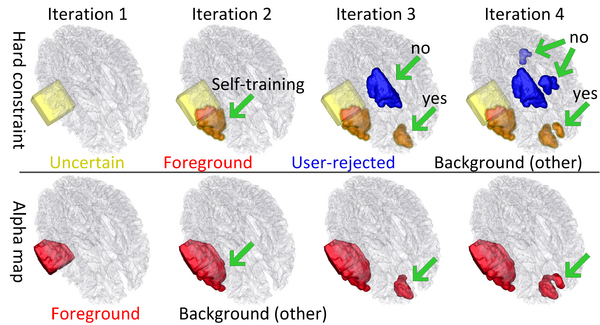

Figure: Flowchart of 4D active cut framework.

Figure: Illustration of the iterative process of 4D active cut on one subject. Iteration 1: User-initialized bounding box. Iteration 2: Self training. Iteration 3 and 4: Active learning. The user stopped the process at 5th iteration. Arrows point to the changes between iterations. The white matter surface is visualized in all figures as reference.

Revision as of 21:32, 11 October 2014


Ongoing Work

4D Active Cut: An Interactive Tool for Pathological Anatomy Modeling

We propose a novel semi-supervised method, called 4D active cut, for lesion recognition and deformation estimation. Existing interactive segmentation methods passively wait for the user to refine the segmentations, which is a difficult task in 3D images that change over time. 4D active cut instead actively selects candidate regions and queries the user about them, thereby obtaining the most informative user feedback. The user simply answers "yes" or "no" to a candidate object without having to refine the segmentation slice by slice. Unlike GrabCut, which detects a single object, our method detects multiple lesions and enforces spatial coherence through Markov random field constraints.

The following figure shows the dynamic learning process of the proposed framework applied to one subject.
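The query step can be illustrated with a minimal sketch. The page does not state how 4D active cut ranks candidate regions, so the entropy criterion, the region ids, and the helper names `select_query` and `apply_answer` below are all assumptions made for illustration, not the published method.

```python
import numpy as np

def binary_entropy(p):
    """Entropy (in bits) of a Bernoulli probability, safe at 0 and 1."""
    p = np.clip(p, 1e-12, 1 - 1e-12)
    return -(p * np.log2(p) + (1 - p) * np.log2(1 - p))

def select_query(candidates):
    """Pick the candidate region the current model is least sure about.

    `candidates` maps a hypothetical region id to the mean lesion
    probability of its voxels. Using entropy as the informativeness
    score is an assumption, not taken from the source.
    """
    return max(candidates, key=lambda rid: binary_entropy(candidates[rid]))

def apply_answer(labels, region_id, is_lesion):
    """Record the user's yes/no answer for one region (returns a new dict)."""
    updated = dict(labels)
    updated[region_id] = bool(is_lesion)
    return updated

# Made-up probabilities for three candidate regions.
candidates = {"region_a": 0.95, "region_b": 0.52, "region_c": 0.10}
query = select_query(candidates)          # the most ambiguous region
labels = apply_answer({}, query, True)    # the user answered "yes"
```

In a full loop, the recorded answer would be fed back to retrain the appearance model before the next query is chosen.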

Modeling 4D Changes in Pathological Anatomy

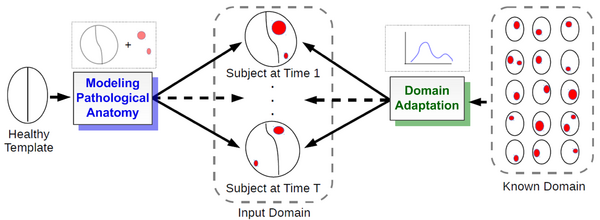

We propose a novel framework that models 4D changes in pathological anatomy across time and provides an explicit mapping from a healthy template to subjects with pathology. Moreover, our framework uses transfer learning to leverage rich information from a known source domain, where we have a collection of completely segmented images, to yield effective appearance models for the input target domain. The automatic 4D segmentation method uses a novel domain adaptation technique for generative kernel density models to transfer information between different domains, resulting in a fully automatic method that requires no user interaction.

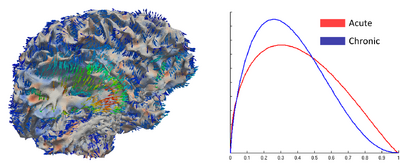

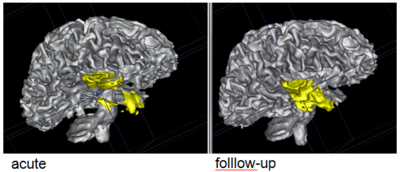

Analyzing imaging biomarkers for traumatic brain injury using 4D modeling of longitudinal MRI

We present a new method for computing surface-based and voxel-based imaging biomarkers using 4D modeling of longitudinal MRI. We analyze the potential for clinical use of these biomarkers by correlating them with TBI-specific patient scores. Our preliminary results indicate that the proposed voxel-based biomarkers are correlated with clinical outcomes [1].
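As a rough illustration of correlating a biomarker with a clinical score, the sketch below computes a Pearson correlation coefficient. The page does not say which correlation measure was used, so Pearson is an assumption, and the numbers are made-up placeholders rather than study data.

```python
import numpy as np

def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    xc = x - x.mean()
    yc = y - y.mean()
    return float((xc @ yc) / np.sqrt((xc @ xc) * (yc @ yc)))

# Placeholder values only: one biomarker value and one score per subject.
biomarker = [1.0, 2.0, 3.0, 4.0, 5.0]
score = [2.0, 4.0, 6.0, 8.0, 10.0]
r = pearson_r(biomarker, score)
```

With only a handful of subjects, a rank-based measure or a permutation test may be a safer choice than relying on the raw coefficient.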

Segmentation of Serial MRI of TBI patients using Personalized Atlas Construction and Topological Change Estimation

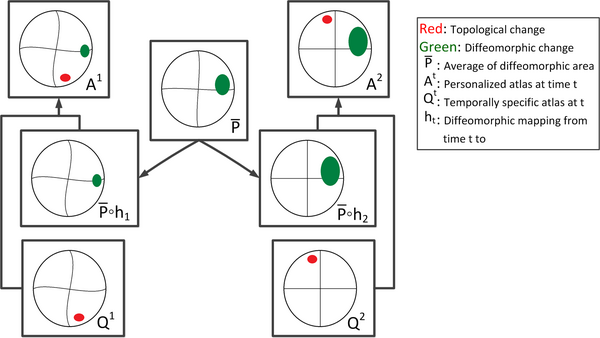

Longitudinal images with TBI present topological changes over time due to the effect of the impact force on tissue, skull, and blood vessels and the recovery process. We address this issue by defining a novel atlas construction scheme that explicitly models the effect of topological changes [2]. Our method automatically estimates the probability of topological changes jointly with the personalized atlas. The following figure shows our atlas construction scheme.

Our method combines 4D information through the creation of a personalized atlas that explicitly handles diffeomorphic and nondiffeomorphic temporal changes. The method is robust to topological changes caused by the injury and the recovery process in TBI.

Patient-Specific Segmentation Framework for Longitudinal MR Images of Traumatic Brain Injury

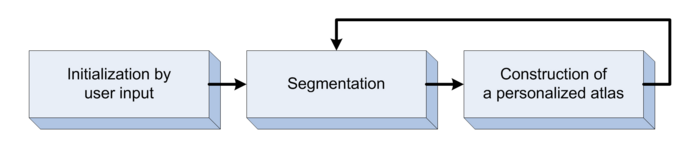

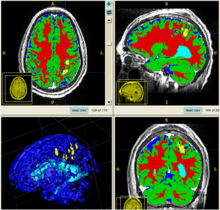

We propose a multi-modal image segmentation framework for longitudinal TBI images [3]. The framework is initialized through manual input of primary lesion sites at each time point, which are then refined by a joint approach composed of Bayesian segmentation and construction of a personalized atlas. The personalized atlas construction estimates the average of the posteriors of the Bayesian segmentation at each time point and warps the average back to each time point to provide the updated priors for Bayesian segmentation. The difference between our approach and segmenting longitudinal images independently is that we use the information from all time points to improve the segmentations. Given a manual initialization, our framework automatically segments healthy structures (white matter, grey matter, cerebrospinal fluid) as well as different lesions such as hemorrhagic lesions and edema. Our framework can handle different sets of modalities at each time point, which provides flexibility in analyzing clinical scans.
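The interplay between Bayesian segmentation and the averaged prior can be sketched in a heavily simplified form. The sketch assumes a single modality, Gaussian class likelihoods, and identity warps between time points, so the atlas step reduces to a plain average. None of these simplifications come from the source, and the function names are hypothetical.

```python
import numpy as np

def gaussian_likelihood(intensity, mean, std):
    """Gaussian density of each voxel intensity under one class model."""
    return np.exp(-0.5 * ((intensity - mean) / std) ** 2) / (std * np.sqrt(2 * np.pi))

def bayes_posteriors(image, priors, means, stds):
    """Voxel-wise class posteriors, proportional to prior times likelihood.

    image:  array of shape (n_voxels,)
    priors: array of shape (n_classes, n_voxels)
    """
    like = np.stack([gaussian_likelihood(image, m, s) for m, s in zip(means, stds)])
    joint = priors * like
    return joint / joint.sum(axis=0, keepdims=True)

def update_priors(posteriors_per_timepoint):
    """Average posteriors over time points (identity warps assumed here)."""
    avg = np.mean(posteriors_per_timepoint, axis=0)
    return avg / avg.sum(axis=0, keepdims=True)

# Toy data: two time points, two classes, three voxels.
means, stds = [0.2, 0.8], [0.2, 0.2]
priors = np.full((2, 3), 0.5)
images = [np.array([0.1, 0.5, 0.9]), np.array([0.2, 0.6, 0.8])]

posteriors = [bayes_posteriors(img, priors, means, stds) for img in images]
new_priors = update_priors(posteriors)  # would seed the next segmentation pass
```

In the framework described above, the average is instead estimated by atlas construction and warped back to each time point before it is reused as a prior.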

Segmentation framework

The segmentation framework is initialized through manual input of primary lesion sites at each time point, which are then refined by a joint approach composed of Bayesian segmentation and construction of a personalized atlas. The following figure shows the segmentation framework for multi-modal MR images.

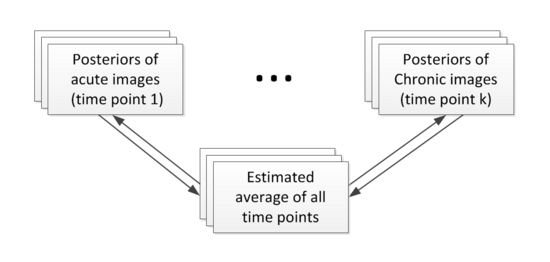

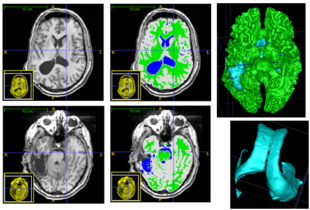

Construction of personalized atlas

Based on the segmentation results (the posteriors), we create personalized atlases using the unbiased diffeomorphic atlas construction method. In atlas construction, we estimate an average that requires the minimum amount of deformation to transform into the posteriors at every time point. The following flowchart shows the estimation of personalized atlases.

Results

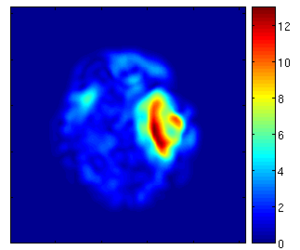

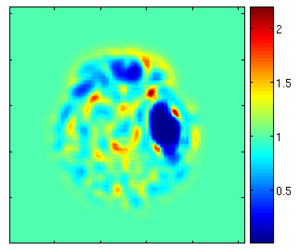

Using the deformation field obtained from the estimation of personalized atlases, we can visualize the tissue deformation. In the following two figures, the deformation field is visualized as vector magnitude (left) and Jacobian (right).
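Both quantities can be computed from a dense displacement field, as in the sketch below. It assumes the field is stored as an array `u` of shape `(3, X, Y, Z)` and that "Jacobian" means the determinant of the Jacobian of the map x -> x + u(x). Both are assumptions about representation and are not stated on this page.

```python
import numpy as np

def displacement_magnitude(u):
    """Per-voxel length of the displacement vectors; u has shape (3, X, Y, Z)."""
    return np.sqrt(np.sum(u ** 2, axis=0))

def jacobian_determinant(u, spacing=(1.0, 1.0, 1.0)):
    """Determinant of the Jacobian of the map x -> x + u(x) at every voxel."""
    # grads[i, j] holds d u_i / d x_j, computed with finite differences.
    grads = np.stack(
        [np.stack(np.gradient(u[i], *spacing), axis=0) for i in range(3)], axis=0
    )
    jac = grads + np.eye(3).reshape(3, 3, 1, 1, 1)  # add identity for x -> x + u
    jac = np.moveaxis(jac, (0, 1), (-2, -1))        # shape (X, Y, Z, 3, 3)
    return np.linalg.det(jac)

# Toy field: uniform 10% expansion about the origin of a 4 x 4 x 4 grid.
x, y, z = np.meshgrid(np.arange(4.0), np.arange(4.0), np.arange(4.0), indexing="ij")
u = 0.1 * np.stack([x, y, z])

magnitude = displacement_magnitude(u)
det_j = jacobian_determinant(u)
```

Under this convention, determinant values above 1 indicate local expansion and values below 1 indicate local compression.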

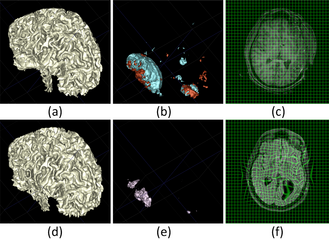

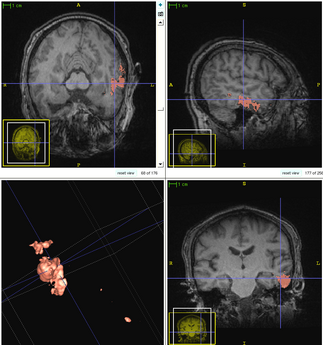

The following two figures show snapshots of the segmentation results.

Former Work

Whereas methodologies for segmentation of normal anatomical structures and tissue types have become standard and highly automated, tools for efficient and reliable segmentation of pathology lag behind. Major efforts on lesion segmentation mostly focus on white matter lesions, as in multiple sclerosis, and on tumor/edema segmentation.

Driven by challenging image analysis problems of the NA-MIC DBP partner UCLA on traumatic brain injury (TBI), we will develop image segmentation methodology that helps clinical researchers characterize and quantify a variety of cerebral lesion types. Standard automated image analysis methods are not robust with respect to the TBI-related changes in image contrast, changes in brain shape, cranial fractures, lesions, white matter fiber alterations, and other signatures of head injury.

We are working on an extension of the "atlas-based classification" (ABC) method for TBI datasets, with the clinical goal of efficiently segmenting healthy brain tissue and cerebral lesions. A main goal will be the automated segmentation of healthy brain tissue and user-assisted segmentation of various cerebral lesion types (hematoma, subarachnoid hemorrhage, contusion and diffuse axonal injury (DAI), perifocal (regional) to diffuse (generalized) edema, hemorrhagic DAI, and more). A strong emphasis will be on the joint analysis of multiple imaging modalities (T1 pre- and T1 postcontrast, T2 (TSE), FLAIR, GRE, SWI, Perfusion, and DTI/DWI) for improved detection and quantitative characterization of lesion types.

Initial experiments

Experiments: Multi-modal registration and tissue segmentation

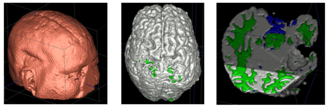

We conducted experiments applying the ABC tool to multi-modal image data of 5 TBI cases provided by DBP partner UCLA. The tool includes co-registration of multiple modalities via mutual-information linear registration, and a nonlinear registration (highly deformable fluid registration) of a probabilistic normative atlas for segmentation of healthy tissue. The following results show the feasibility of multi-modal registration and segmentation of normal tissue. Pathology is segmented in postprocessing using 3D user-supervised level-set evolution.
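The similarity measure behind mutual-information registration can be sketched with a joint intensity histogram. This is a generic histogram-based estimate, not the ABC tool's implementation. The bin count and the synthetic images are assumptions for illustration.

```python
import numpy as np

def mutual_information(a, b, bins=8):
    """Histogram-based mutual information (in nats) between two images."""
    hist, _, _ = np.histogram2d(np.ravel(a), np.ravel(b), bins=bins)
    pxy = hist / hist.sum()                # joint intensity distribution
    px = pxy.sum(axis=1, keepdims=True)    # marginal of image a
    py = pxy.sum(axis=0, keepdims=True)    # marginal of image b
    nz = pxy > 0                           # skip empty bins to avoid log(0)
    return float(np.sum(pxy[nz] * np.log(pxy[nz] / (px @ py)[nz])))

# Synthetic stand-ins for two modalities of the same subject.
rng = np.random.default_rng(0)
fixed = rng.random((16, 16))
unrelated = rng.random((16, 16))

mi_aligned = mutual_information(fixed, 1.0 - fixed)  # inverted contrast, same anatomy
mi_unrelated = mutual_information(fixed, unrelated)
```

A registration routine would search over transform parameters for the alignment that maximizes this value. The inverted-contrast pair scores high because mutual information depends on the statistical relationship between intensities, not on their equality, which is why it suits multi-modal data.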

Current work, jointly with the UCLA DBP partner, centers on a clinical definition of the most common lesion types and a multi-modality MRI characterization of these patterns. These characterizations will be used to develop a segmentation methodology for the broad range of cerebral lesion types, based on a user-guided definition of such patterns in current TBI cases.

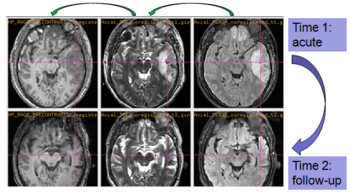

Experiments: Registration of longitudinal data

The NA-MIC DBP project on TBI analysis (UCLA partner) includes serial multi-modal MRI at the acute phase and at follow-up after six months. We test mutual-information-based linear registration of multi-modal MRI data within each time point and nonlinear (B-spline) registration of follow-up scans to obtain sets of images mapped into the same coordinate system. Preliminary results demonstrate large deformations due to the mass effect of a large lesion, as well as large regional changes in multi-modal MRI contrast from acute to follow-up.

Conclusion

In this work, we will develop new methodologies and tools for user-guided segmentation of cerebral lesions in multi-modal brain MRI. The driving application is TBI imaging research as conducted by the NA-MIC DBP partner UCLA. Components to be included will be robust clustering as presented in [6], initialization of multi-modal intensity patterns via prior knowledge [6], and evaluation of generative models as presented in [4] and [5]. Developments will also include efficient user guidance for localization and characterization of subject-specific lesion patterns, and tools to provide quantitative measurements and indices of the spatial extent of pathologies currently used in clinical assessment. We will evaluate extending the commonly used indices derived from 2D images to 3D shape features.

Collaboration events

Telephone meeting (April 1st, 2013)

- Development of the multimodal registration and segmentation software.

- Journal article submission of current method.

- Grant reviews.

- Schedule of Andrei and Micah's visit.

(NA-MIC) All hands meeting (AHM) and Winter Project Week 2013 (January 7-11)

We explored the possibilities of combining structural and DTI information for assessment of recovery and treatment efficacy for TBI. We tried the affine, B-spline, and demons registration modules in Slicer to coregister structural and DTI data of TBI.

Webpage of this event

Telephone meeting (Sept. 17th, 2012)

Andrei and Bo talked over Skype and discussed the ongoing work on computation and visualization of cortical atrophy.

(NA-MIC) Summer Project Week 2012 (July 18-22)

We performed a systematic inspection of available UCLA TBI data. Problematic data sets were discussed, and some additional data were obtained from UCLA for one data set. Bo and Andrei discussed starting work on two new TBI-related projects which may yield novel biomarkers of disease outcome.

Webpage of this event

(NA-MIC) All hands meeting (AHM) and Winter Project Week 2012 (January 9-13)

We received two new subjects from the UCLA DBP. We processed the data and tested the current algorithm. We talked to our collaborators and learned about pathology, including different lesions, necrosis, and tissue compression. We also discussed possible metrics we could provide to quantitatively analyze the tissue/lesion changes.

Webpage of this event

Telephone meeting (Sept. 9th, 2011)

During this telephone meeting, we discussed the following topics.

- Integrating Mriwatcher into Slicer

- Manual segmentation and coregistration

- The SPIE submission

- The topological changes in TBI data

(NA-MIC) Summer Project Week 2011 (July 20-24)

We prepared the preliminary algorithm for this project week. During the project week, we applied the preliminary algorithm to current data and fixed some bugs in the code. Our collaborators helped us to validate our current results of the supervised segmentation. We got some comments and feedback from our collaborators, which are very important for us to improve the current algorithm.

Webpage of this event

(NA-MIC) All hands meeting (AHM) and Winter Project Week 2011 (January 10-14)

This was the first NA-MIC meeting between the UCLA group and the Utah group. During the project week, the collaborators on this project (the UCLA and Utah groups) held many discussions, worked together, and answered each other's questions. Marcel explained the purpose of all ABC parameters and how they affect the final results. We discussed the user interaction we might use in the next step for better segmentation. Moreover, we learned more about the requirements of neurosurgeons and clinicians. Andrei pointed out that the result for one subject was not good enough. Bo checked his results, redid the processing, and found the cause of the problem.

Webpage of this event

Literature

[1] Bo Wang, Marcel Prastawa, Andrei Irimia, Micah C. Chambers, Neda Sadeghi, Paul M. Vespa, John D. Van Horn, Guido Gerig, Analyzing Imaging Biomarkers for Traumatic Brain Injury Using 4D Modeling of Longitudinal MRI, In IEEE Proceedings of ISBI 2013, pp. (accepted). 2013.

[2] Bo Wang, Marcel Prastawa, Suyash P. Awate, Andrei Irimia, Micah C. Chambers, Paul M. Vespa, John D. Van Horn, Guido Gerig, Segmentation of Serial MRI of TBI patients using Personalized Atlas Construction and Topological Change Estimation, In Proceedings of IEEE ISBI 2012, pp. 1152--1155. 2012.

[3] Bo Wang, Marcel Prastawa, Andrei Irimia, Micah C. Chambers, Paul M. Vespa, John D. Van Horn, and Guido Gerig, A Patient-Specific Segmentation Framework for Longitudinal MR Images of Traumatic Brain Injury, Proc. SPIE 8314, 831402 (2012).

[4] Marcel Prastawa, Elizabeth Bullitt, Guido Gerig, Simulation of brain tumors in MR images, Medical Image Analysis 13 (2009), pp. 297-311, PMID: 19119055

[5] Marcel Prastawa and Guido Gerig, Brain Lesion Segmentation through Physical Model Estimation, International Symposium on Visual Computing ISVC 2008, LNCS, Vol. 5358, pp. 562--571.

[6] Marcel Prastawa, John H. Gilmore, Weili Lin, Guido Gerig, Automatic Segmentation of MR Images of the Developing Newborn Brain, Medical Image Analysis (MedIA). Vol 9, October 2005, pages 457-466

Key Investigators

- Utah: Bo Wang, Marcel Prastawa, Guido Gerig

- UCLA: John D. Van Horn, Andrei Irimia, Micah Chambers / Clinical partners: Paul Vespa, M.D., David Hovda, M.D.
